Attention Is All You Need (Transformer)

In 2017, Vaswani et al. published "Attention Is All You Need", an influential research paper presented at the Conference on Neural Information Processing Systems (NIPS 2017, now NeurIPS). The paper introduced the Transformer, a novel neural network (NN) architecture for natural language processing (NLP) tasks. [1] [2] As of 2022, it had become one of the most cited and influential papers in NLP and deep learning, with over 12,000 citations. The work was carried out by researchers at Google Brain and Google Research, Google's AI research divisions. [1] [3]

The new architecture was based on a self-attention mechanism well suited to language understanding. [2] Previously, the dominant sequence transduction models were based on complex recurrent or convolutional neural networks that included an encoder and a decoder, and the best-performing models also connected the encoder and decoder through an attention mechanism. The Transformer instead uses a simpler architecture based solely on attention mechanisms, dispensing with recurrence and convolutions entirely. [3]

Experiments on two machine translation tasks showed that the model was "superior in quality while being more parallelizable and requiring significantly less time to train." [3] The authors also showed that the Transformer generalizes well to other tasks by applying it successfully to English constituency parsing. According to the authors, "The Transformer allows for significantly more parallelization and can reach a new state of the art in translation quality after being trained for as little as twelve hours on eight P100 GPUs." [3]

The paper's main contribution was demonstrating the effectiveness of attention mechanisms in NLP tasks. [1] Transformers improve on earlier approaches and now underpin many NLP applications, including large language model (LLM) products such as ChatGPT and Microsoft Copilot, as well as Google Search and the text-to-image model DALL-E. Transformer models have also been shown to learn from chemical structures, predict protein folding, and analyze medical data at scale. [4]

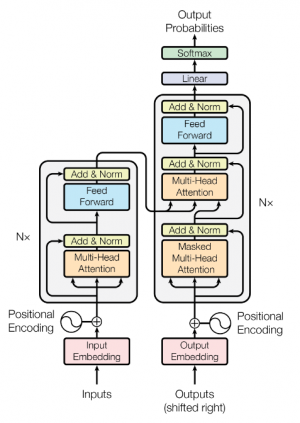

Model Architecture

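The Transformer consists of an encoder and a decoder, each built from a stack of identical layers (six in the paper) (figure 1). [1] [3] Each encoder layer has two sub-layers: multi-head self-attention and a position-wise fully connected feed-forward network. Each decoder layer adds a third sub-layer that performs multi-head attention over the encoder's output. Every sub-layer is wrapped in a residual connection followed by layer normalization. [3] This architecture relies only on attention mechanisms, rather than recurrence, to process input sequences. [1]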
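
The layer structure described above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes toy dimensions (d_model = 4, d_ff = 3, versus 512 and 2048 in the paper), hypothetical hand-picked weights, and a placeholder identity function in place of real multi-head attention. Layer normalization here omits its learned gain and bias, and dropout is left out. The helper names (`layer_norm`, `position_wise_ffn`, `encoder_layer`) are illustrative only.

```python
import math
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def layer_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """Normalize one position's vector to zero mean and unit variance.
    The learned gain and bias of real layer normalization are omitted here."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(x: Vector, W: Matrix, b: Vector) -> Vector:
    """Compute x @ W + b, where W has len(x) rows and len(b) columns."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def position_wise_ffn(x: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    """FFN(x) = max(0, x W1 + b1) W2 + b2, applied to a single position."""
    hidden = [max(0.0, h) for h in linear(x, W1, b1)]
    return linear(hidden, W2, b2)


def encoder_layer(
    X: Matrix,
    self_attention: Callable[[Matrix], Matrix],
    ffn: Callable[[Vector], Vector],
) -> Matrix:
    """One encoder layer: each sub-layer's output is LayerNorm(x + Sublayer(x))."""
    # Sub-layer 1: self-attention mixes information across all positions.
    attn_out = self_attention(X)
    H = [layer_norm([a + s for a, s in zip(x, y)]) for x, y in zip(X, attn_out)]
    # Sub-layer 2: the same feed-forward network is applied to each position independently.
    return [layer_norm([a + f for a, f in zip(h, ffn(h))]) for h in H]


# Hypothetical toy weights: d_model = 4, d_ff = 3 (the paper uses 512 and 2048).
W1 = [[0.1, 0.2, 0.0], [0.0, 0.1, 0.3], [0.2, 0.0, 0.1], [0.1, 0.1, 0.1]]
b1 = [0.0, 0.1, 0.0]
W2 = [[0.1, 0.0, 0.2, 0.0], [0.0, 0.1, 0.0, 0.2], [0.3, 0.0, 0.1, 0.0]]
b2 = [0.0, 0.0, 0.0, 0.0]

X = [[1.0, 0.0, 2.0, 1.0], [0.5, 1.5, 0.0, 1.0]]
out = encoder_layer(
    X,
    self_attention=lambda seq: seq,  # placeholder stand-in for real multi-head attention
    ffn=lambda h: position_wise_ffn(h, W1, b1, W2, b2),
)
```

Each output row has zero mean and (almost exactly) unit variance because layer normalization is the last step of every sub-layer. A decoder layer would follow the same pattern with one extra attention sub-layer over the encoder output.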

Attention

Vaswani et al. (2017) explain that "An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key." [3] To capture long-range dependencies and contextual relationships between words in a sentence, the model uses attention to let the network focus selectively on specific parts of the input sequence. [1]
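
The paper's specific attention function is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, where the compatibility function is the dot product of a query and a key divided by the square root of the key dimension d_k. The sketch below implements that formula in plain Python for a single head; the toy query, keys, and values are hypothetical and chosen only so the weighting is easy to follow.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q: Matrix, K: Matrix, V: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V, one row per query."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        # Compatibility of this query with every key: the scaled dot product.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is the weighted sum of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
        all_weights.append(weights)
    return outputs, all_weights


# Toy example: one query that matches the first key more closely than the second.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out, weights = scaled_dot_product_attention(Q, K, V)
```

Here the query's weights come out at roughly 0.67 for the first key and 0.33 for the second, so the output leans toward the first value vector. Dividing by sqrt(d_k) keeps the dot products from growing with the dimension, which the paper motivates as preventing the softmax from saturating.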

Self-attention (also known as intra-attention) "is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence. Self-attention has been used successfully in a variety of tasks including reading comprehension, abstractive summarization, textual entailment and learning task-independent sentence representations." [3]

Self-attention lets the model weigh the importance of different input elements, attending to different parts of the input sequence to generate a context-aware representation of each word. It is also reported to require less training data than traditional models. [1] [5]
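
What makes attention "self" attention is that the queries, keys, and values are all projections of the same input sequence. The following single-head sketch assumes a hypothetical three-token sequence with d_model = 2 and uses identity matrices in place of the learned projection matrices; the paper actually uses multi-head attention, running several such heads in parallel and concatenating their outputs.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain matrix product A @ B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax_rows(S: Matrix) -> Matrix:
    """Row-wise softmax so each row of attention weights sums to 1."""
    result = []
    for row in S:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def self_attention(X: Matrix, W_q: Matrix, W_k: Matrix, W_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head self-attention: queries, keys and values all come from the same sequence X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, K_T)]
    weights = softmax_rows(scores)  # weights[i][j]: how strongly position i attends to position j
    return matmul(weights, V), weights


# Hypothetical 3-token sequence; identity matrices stand in for learned projections.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
context, weights = self_attention(X, identity, identity, identity)
```

Each row of `context` is a context-aware representation of one token: a blend of every token's value vector, weighted by how relevant the model judges each one to be.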

Positional Encoding

Because the model contains no recurrence or convolution, it has no built-in notion of token order. To supply this information, positional encodings are added to the input embeddings, enabling the network to distinguish elements by their positions. The paper uses fixed sine and cosine functions of different frequencies for this purpose. [3]
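
The sinusoidal encoding assigns PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) to even dimensions and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)) to odd dimensions. The sketch below computes that table in plain Python and adds it to a batch of embeddings; the tiny sizes (three positions, d_model = 4) and the helper names are illustrative assumptions.

```python
import math
from typing import List


def positional_encoding(max_len: int, d_model: int) -> List[List[float]]:
    """Sinusoidal encodings: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)),
    PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model))."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):  # i here is the even dimension index 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


def add_positions(embeddings: List[List[float]]) -> List[List[float]]:
    """Add the positional encoding to each token embedding (both have dimension d_model)."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(embeddings, pe)]


pe = positional_encoding(max_len=3, d_model=4)
```

Low dimensions oscillate quickly with position while higher dimensions change slowly, so each position receives a distinct pattern. Because the encodings are added rather than concatenated, the embedding size stays at d_model.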

Training and Inference

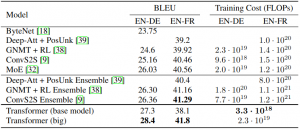

The models were trained on the standard WMT 2014 English-German dataset, consisting of about 4.5 million sentence pairs encoded with byte-pair encoding (a shared source-target vocabulary of about 37,000 tokens). For English-French, the authors used the significantly larger WMT 2014 English-French dataset of 36 million sentences, with tokens split into a 32,000 word-piece vocabulary. [3]
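
Byte-pair encoding builds its vocabulary by repeatedly merging the most frequent pair of adjacent symbols. The sketch below shows that generic merge-learning loop on a hypothetical five-word corpus; it is not the specific tokenizer the authors used, and the function names are illustrative. Ties between equally frequent pairs are broken by first occurrence, which is an arbitrary choice of this sketch.

```python
from collections import Counter
from typing import Dict, List, Tuple

Word = Tuple[str, ...]


def pair_counts(vocab: Dict[Word, int]) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts: Counter = Counter()
    for word, freq in vocab.items():
        for a, b in zip(word, word[1:]):
            counts[(a, b)] += freq
    return counts


def merge_pair(vocab: Dict[Word, int], pair: Tuple[str, str]) -> Dict[Word, int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: Dict[Word, int] = {}
    for word, freq in vocab.items():
        out: List[str] = []
        i = 0
        while i < len(word):
            if i + 1 < len(word) and (word[i], word[i + 1]) == pair:
                out.append(word[i] + word[i + 1])
                i += 2
            else:
                out.append(word[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_freqs: Dict[str, int], num_merges: int) -> Tuple[List[Tuple[str, str]], Dict[Word, int]]:
    """Greedily learn merges: each step merges the most frequent adjacent pair."""
    vocab: Dict[Word, int] = {tuple(w): f for w, f in word_freqs.items()}
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        counts = pair_counts(vocab)
        if not counts:
            break  # every word is already a single symbol
        best = max(counts, key=counts.__getitem__)
        merges.append(best)
        vocab = merge_pair(vocab, best)
    return merges, vocab


# Hypothetical toy corpus (word -> frequency); real BPE runs on millions of sentences.
merges, vocab = learn_bpe({"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}, num_merges=3)
```

On this corpus the first three merges are ("u", "g"), ("u", "n"), and ("h", "ug"), so frequent fragments such as "hug" become single tokens while rare words stay split into smaller pieces.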

The models were trained on a single machine with eight NVIDIA P100 GPUs. The base models were trained for 100,000 steps (12 hours) and the big models for 300,000 steps (3.5 days). [3]
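
A quick back-of-the-envelope check using only the figures above: dividing wall-clock time by the number of steps gives the average time per step, and multiplying by the eight GPUs gives total GPU-hours. These are derived estimates rather than figures from this article, though they agree with the roughly 0.4 s and 1.0 s per step reported in the paper.

```python
def seconds_per_step(total_hours: float, steps: int) -> float:
    """Average wall-clock time per training step."""
    return total_hours * 3600 / steps


NUM_GPUS = 8  # one machine with eight P100 GPUs

base_hours = 12.0
big_hours = 3.5 * 24  # 3.5 days

base_step = seconds_per_step(base_hours, 100_000)  # about 0.43 s per step
big_step = seconds_per_step(big_hours, 300_000)    # about 1.01 s per step
base_gpu_hours = base_hours * NUM_GPUS             # 96 GPU-hours
big_gpu_hours = big_hours * NUM_GPUS               # 672 GPU-hours
```

The big model takes roughly 2.3 times as long per step and trains for three times as many steps, which is why its total cost is about seven times that of the base model.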

Experimental Results

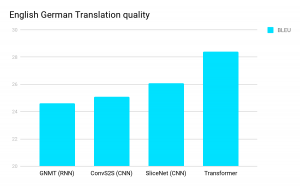

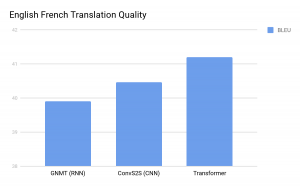

Both the base and big Transformer models outperformed the best previously published models and ensembles while reducing training costs (figures 2, 3, and 4). [3]

Relevant Links

- Attention

- Convolutional neural network

- Decoder

- Encoder

- Feedforward neural network (FNN)

- Language model

- Recurrent models (Recurrent neural network)

- Neural Network

- Self-Attention

- Sequence model

- Transformer

References

- [1] Pandle, A. S. Attention Is All You Need: Paper Summary and Insights. OpenGenus. https://iq.opengenus.org/attention-is-all-you-need-summary/

- [2] Uszkoreit, J. (2017). Transformer: A Novel Neural Network Architecture for Language Understanding. Google AI Blog. https://ai.googleblog.com/2017/08/transformer-novel-neural-network.html?m=1

- [3] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L. and Polosukhin, I. (2017). Attention Is All You Need. arXiv:1706.03762v5. https://arxiv.org/pdf/1706.03762.pdf

- [4] Lawton, G. (2023). What Is a Transformer Model? TechTarget. https://www.techtarget.com/searchenterpriseai/definition/transformer-model

- [5] Muñoz, E. (2020). Attention Is All You Need: Discovering the Transformer Paper. Towards Data Science. https://towardsdatascience.com/attention-is-all-you-need-discovering-the-transformer-paper-73e5ff5e0634