Stable Diffusion

Stable Diffusion is a text-to-image latent diffusion model developed by Stability AI, allowing users to generate art in seconds based on their natural language inputs, known as prompts. [1] [2] This model, released to the public with an open-source license, can also perform tasks like inpainting, outpainting, and image translation. [3] [4] Furthermore, it can run on consumer GPUs. [5]

Stable Diffusion is available in several forms: a public demo on Hugging Face, which is slower and breaks often; a software beta named DreamStudio, which is faster and easier to use but charges the user after a certain number of image generations; and the full model, which anyone can download for unlimited image generation. [5] [6] Third-party developers have also made the software easier to download and use, for example with a macOS version that has a simple one-click installer. [6]

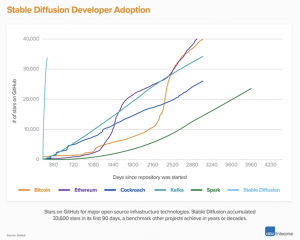

The AI model was funded by Stability AI and released publicly on August 22, 2022. [4] It had an immediate impact, being embraced by the AI art community and criticized by traditional artists. The general public has reacted with both excitement and concern over the potential of the technology. While AI-generated art has been gaining popularity, Stable Diffusion could propel adoption even further. [6] The program has topped the trending charts of GitHub repositories by a wide margin. [7]

Other image-generation tools are controlled by well-funded companies like OpenAI (DALL-E) or Google (Imagen). Stable Diffusion, by contrast, is openly accessible, easy to use and to build on, and designed for low computational resources, which has made its growth surpass that "of any recent technology in infrastructure or crypto" (figure 1). [8]

The open-source model is a key difference between Stable Diffusion and other AI art generators, allowing total access to the model, which even Midjourney, another project outside of Big Tech, doesn't offer. This openness is expected to lead to a faster improvement compared to its rivals. Indeed, on Stable Diffusion's subreddit, users share their favorite prompts and come up with new use cases for the model and its integration into established creative tools. [6]

Stable Diffusion 1

The original Stable Diffusion development and release were led by Patrick Esser (Runway ML) and Robin Rombach (Stability AI and Machine Vision & Learning research group at LMU Munich). It was based on their previous work on Latent Diffusion Models at CVPR'22 and combined with support from EleutherAI, LAION, and Stability AI's team. [1] [9] The model was first released to researchers and then to the public. [10]

Stability AI stated that, in cooperation with Hugging Face's legal, ethics, and technology teams, the model was released under a CreativeML Open RAIL-M license, a permissive license that allows for commercial and non-commercial usage. They also developed "an AI-based Safety Classifier included by default in the overall software package" that removes undesirable outputs. Since Stable Diffusion was trained on image-text pairs from a large database taken from the internet, it can reproduce some societal biases and unsafe content. [10]

Stable Diffusion 2

In November 2022, the second version of Stable Diffusion was released, delivering several improvements and features compared to V1. It is also optimized to run on a single GPU, making it accessible to a large number of people. According to Stability AI, newly available features like depth2img and higher-resolution upscaling capabilities will "serve as the foundation of countless applications and enable an explosion of new creative potential." [9]

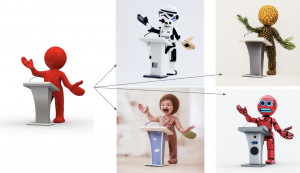

The new depth-guided Stable Diffusion model (depth2img) extends the image-to-image feature present in the first version (figure 2). This opens up new creative applications, transforming images while preserving the coherence and depth of the original. [9]

The new version of the model can generate images at default resolutions of 512x512 and 768x768 pixels (figure 3). [9] [11] It includes an Upscaler Diffusion model "that enhances the resolution of images by a factor of 4" (figure 4). For example, it can increase the resolution of an image from 128x128 to 512x512. It can also generate images with very high resolutions such as 2048x2048 and higher. Its inpainting diffusion model was also updated, making it easier to replace parts of an image. [9]

Stable Diffusion 2 was trained using OpenCLIP, a new text encoder developed by LAION, improving the image quality. The training dataset was more diverse and wide-ranging than that of the first version. Also, adult content was filtered using LAION's NSFW filter. While the training dataset increased image quality, especially in areas like architecture, interior design, wildlife, and landscape scenes, the filter dramatically reduced the number of people in the dataset. However, the filters were later adjusted, still stripping out adult content, but less aggressively. This reduced the number of false positives, resulting in a better balance between the generation of beautiful architectural concepts and natural scenery while also producing good images of people and pop culture. [12]

Released in December 2022, version 2.1 of the model delivered further improvements like better anatomy, hands, and a greater range of art styles. It also supports a new prompting style and the capability to render non-standard resolutions, producing images in extreme aspect ratios (figure 5). [12]

Negative prompts, which allow the user to write what not to generate, eliminating unwanted details like too many fingers or blurry images, have been further improved in 2.1. [12]
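The source does not describe how negative prompts work internally. As a rough illustration only, many open implementations are understood to feed the negative prompt into classifier-free guidance in place of the empty prompt. The sketch below assumes that mechanism; `guided_noise` and its toy inputs are hypothetical names, and the short lists stand in for the model's noise predictions.

```python
def guided_noise(noise_positive, noise_negative, guidance_scale=7.5):
    """Classifier-free guidance (assumed mechanism, not taken from the article).

    Starts from the prediction conditioned on the negative prompt and moves
    guidance_scale times the distance toward the prediction conditioned on
    the positive prompt, which pushes the result away from the negative one.
    """
    return [neg + guidance_scale * (pos - neg)
            for pos, neg in zip(noise_positive, noise_negative)]


# Toy noise predictions standing in for real model outputs.
pos = [0.5, -0.25, 1.0]   # conditioned on the prompt
neg = [0.5, 0.25, 0.0]    # conditioned on the negative prompt
combined = guided_noise(pos, neg, guidance_scale=2.0)
```

With a guidance scale of 1.0 the result equals the positive prediction; larger values exaggerate whatever distinguishes the prompt from the negative prompt.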

Finally, Stability AI stated that "users can prompt the model to have more or less of certain elements in a composition, such as certain colors, objects or properties, using weighted prompts. Starting with a standard prompt and then refining the overall image with prompt weighting to increase or decrease compositional elements gives users greater control over image synthesis." [12]

Architecture

Stable Diffusion is a deep learning (DL) model. DL is a specialized type of machine learning (ML), which is itself a subset of artificial intelligence (AI). By passing data between interconnected nodes, simulating communication between neurons, DL tries to enable computers to match the way humans think. It can analyze unstructured data (image, video, audio, and text) using an artificial neural network (ANN), a complex structure of algorithms. [3]

Generative AI uses DL algorithms to detect patterns in the input and produce content like text (GPT-3), images (DALL-E, Midjourney), video, or code (Copilot). In diffusion models, two processes take place: forward diffusion and reverse diffusion. In forward diffusion, Gaussian noise is added to the input image. In reverse diffusion, the noise is removed and the values of the input image pixels are recovered. [3]
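The forward process can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the model's actual training code: it uses the closed-form noising rule and the linear noise schedule that are common in the diffusion literature, neither of which is specified in the article, and the function names and the flat list used as an "image" are hypothetical.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, evenly spaced (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t]: running product of (1 - beta), the share of signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_diffuse(pixels, alpha_bar, rng):
    """Noise an image in one jump: sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    signal = math.sqrt(alpha_bar)
    sigma = math.sqrt(1.0 - alpha_bar)
    return [signal * p + sigma * rng.gauss(0.0, 1.0) for p in pixels]


rng = random.Random(0)
image = [1.0] * 4096  # toy "image": a flat list of identical pixel values
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
slightly_noisy = forward_diffuse(image, alpha_bars[10], rng)      # early step
almost_pure_noise = forward_diffuse(image, alpha_bars[-1], rng)   # final step
```

Early steps leave the image nearly intact, while the final step is close to pure Gaussian noise. Reverse diffusion trains a network to undo this corruption one step at a time.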

Latent Diffusion Model

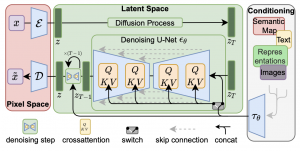

Stability AI uses a Latent Diffusion Model (LDM), which is more efficient than a regular diffusion model (DM). A DM (figure 6) operates on large input data, which makes it computationally expensive. An LDM avoids this by working on compressed data, and the smaller data size makes image generation faster. [3]
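A back-of-the-envelope calculation shows the scale of the saving. It uses the 512x512 image and 64x64 latent sizes that the article gives for Stable Diffusion; the channel counts (3 for RGB, 4 for the latent) are assumptions that the article does not state, and `element_count` is a hypothetical helper.

```python
def element_count(height, width, channels):
    """Number of values a model must process for one image or latent."""
    return height * width * channels


pixel_values = element_count(512, 512, 3)   # RGB image: 786432 values
latent_values = element_count(64, 64, 4)    # latent, assuming 4 channels: 16384 values
compression = pixel_values / latent_values  # 48.0
```

Under these assumptions, each denoising step touches about 48 times fewer values than it would in pixel space.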

A text-to-image system must be able to control the generated imagery through text prompts. To this end, a DL technique is used that consists of "concatenating to the noise patch a vector that represents a bit of text, then training the model on a dataset of {image: caption} pairs." [13]

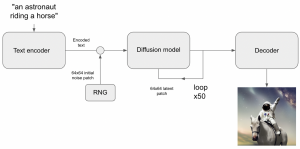

Stable Diffusion's architecture (figure 7) consists mainly of three parts:

- Text encoder;

- Diffusion model;

- Decoder.

The text encoder converts the prompt into a latent vector, the diffusion model repeatedly denoises a 64x64 latent image patch, and the decoder converts the final 64x64 latent patch into a 512x512 image. [13]
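The data flow through the three parts can be sketched with stand-in functions. Everything below is a hypothetical placeholder: the real components are trained neural networks, whereas these stubs only reproduce the shapes described above (a prompt becomes a vector, a 64x64 latent is refined over several steps, and the result is expanded to 512x512).

```python
import random

LATENT_SIZE = 64  # latent patch is 64x64 (from the text)
SCALE = 8         # 64 * 8 = 512 output pixels per side


def encode_text(prompt, dim=8):
    """Stand-in text encoder: maps a prompt to a fixed-length vector."""
    rng = random.Random(prompt)  # seeded by the prompt, so equal prompts give equal vectors
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def denoise_step(latent, text_vector, strength=0.1):
    """Stand-in diffusion model: nudges each latent value toward a text-dependent target."""
    target = sum(text_vector) / len(text_vector)
    return [[v + strength * (target - v) for v in row] for row in latent]


def decode(latent):
    """Stand-in decoder: nearest-neighbour upsampling by SCALE in each direction."""
    image = []
    for row in latent:
        wide = [v for v in row for _ in range(SCALE)]
        image.extend([list(wide) for _ in range(SCALE)])
    return image


def generate(prompt, steps=50, seed=0):
    """Encode the prompt, start from random noise, denoise repeatedly, then decode."""
    rng = random.Random(seed)
    text_vector = encode_text(prompt)
    latent = [[rng.gauss(0.0, 1.0) for _ in range(LATENT_SIZE)]
              for _ in range(LATENT_SIZE)]
    for _ in range(steps):
        latent = denoise_step(latent, text_vector)
    return decode(latent)


image = generate("a lighthouse at dusk")
```

The point of the sketch is the structure of `generate`: the expensive loop runs only on the small latent, and the decoder is called once at the end.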

Training

Stable Diffusion was trained on an aesthetic subset of the LAION-5B dataset created by the DeepFloyd Team at Stability AI. [1] According to Borji (2022), it was "trained on 512x512 images from a subset of the LAION-5B database. It uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts." [4] Starting with version 2.0, the model was trained on a 768x768 dataset, supporting greater coherence at higher native resolutions.

Stability AI mentioned in their official blog that the model was trained on their 4,000 A100 Ezra-1 AI ultracluster. [1]

API

Stability AI made available an API of Stable Diffusion with the goal of making the technology as accessible as possible. This option allows developers to integrate AI-assisted image generation into their projects. [14] This is available at Stability's API platform site.

Applications

Stable Diffusion can be used in different areas:

- Photography

- Concept Art

- Architecture

- Fashion

- 3D

- Videogames

- Graphic Design

- Wallpaper

- Cinema [3]

Stable Diffusion options

Hugging Face

Hugging Face hosts a public demo of Stable Diffusion on their website. To use it, enter a prompt in the text box and click "Generate image." Four AI-generated images are then returned. [3]

DreamStudio

DreamStudio beta (beta.dreamstudio.ai) is an in-browser graphical interface for Stable Diffusion. As the official team's interface and API, it gives the user more control and faster image generation.

The GUI uses a credit-based system: the user receives a certain number of free credits, and generating an image costs 0.2 credits. [15] After running out of free credits, the user needs to pay for a membership, which costs $10. [3]

Local Machine

As an open-source model, Stable Diffusion can be run for free on Windows, Mac, or Linux computers. A desktop graphical interface such as UnstableFusion can be used on these operating systems. Diffusion Bee is another option, but only on Mac computers. [1]

DiffusionDB

DiffusionDB is a large-scale prompt dataset with 14 million images generated by Stable Diffusion and their respective prompts. With the popularity of image generation based on text prompts, prompt engineering has become a field of study to create images with desired details. The database was constructed by collecting images shared on the public Discord server of Stable Diffusion and released with a CC0 1.0 license. The code that collects and processes the images and prompts was open-sourced. [16]

According to Wang et al. (2022), this database can reveal new prompt patterns and help "researchers to systematically investigate diverse prompts and associated images that were previously not possible. Through analyzing the linguistic patterns of prompts, we discover the common prompt patterns and tokens." [16] It can also provide new research directions, creating opportunities for researchers in ML and human-computer interaction and supporting work on prompt engineering, deepfake detection, and debugging and explaining large generative models. [16]
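The kind of token analysis described above can be illustrated with a simple frequency count. This is only a sketch of the idea, not the method used by the DiffusionDB authors: the three prompts are invented for illustration and are not taken from the dataset, and `token_counts` is a hypothetical helper.

```python
import re
from collections import Counter


def token_counts(prompts):
    """Count lowercase alphanumeric tokens across a collection of prompts."""
    counts = Counter()
    for prompt in prompts:
        counts.update(re.findall(r"[a-z0-9]+", prompt.lower()))
    return counts


# Invented example prompts, not drawn from DiffusionDB.
sample_prompts = [
    "a castle on a hill, highly detailed, digital art",
    "portrait of a fox, highly detailed, oil painting",
    "a city at night, digital art, trending on artstation",
]
counts = token_counts(sample_prompts)
top = counts.most_common(3)
```

On a real dataset the same count would be run over millions of prompts, typically after removing common stop words such as "a" and "on" so that style modifiers stand out.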

Differences to other text-to-image AI and concerns

- Figure 8. Comparison between Stable Diffusion, Midjourney, DALL-E and real images. Source: Borji (2022).

The main differences between Stable Diffusion and other text-to-image models are the open-source approach and a hands-off attitude to the moderation of generated content. While Stability AI's model has some built-in keyword filters, they can be bypassed, especially when running the program on a local machine. The CEO of Stability AI, Emad Mostaque, said that "Ultimately, it's peoples' responsibility as to whether they are ethical, moral, and legal in how they operate this technology," adding that "The bad stuff that people create with it [...] I think it will be a very, very small percentage of the total use." [6]

As with other art-generating AI models, Stable Diffusion has prompted discussion over copyright. Stability AI does not claim any copyright over the images, but the database on which the AI model was trained contains copyrighted material. [3] [6] Criticism of tools like Stable Diffusion touches upon the possibility of such tools taking artists' jobs or being misused for malicious purposes like misinformation and disinformation. [6]

Borji (2022) compared the performance of Stable Diffusion, Midjourney, and DALL-E 2 in face generation (figure 8). The study found that Stability AI's model performed better than the other two in this specific case. [4]

Requirements

- Under 10 GB of VRAM.

- Nvidia GPU (support for AMD GPUs will be added).

- Processor with at least four cores and eight threads or more. [1] [5] [17]

References

- [1] Stability AI. Stable Diffusion launch announcement. Stability AI. https://stability.ai/blog/stable-diffusion-announcement

- [2] Stable Diffusion GitHub. Stable Diffusion. GitHub. https://github.com/CompVis/stable-diffusion#stable-diffusion-v1

- [3] Raj, G (2022). What is Stable Diffusion? A complete guide on how to run, copyright, use cases. Decentralized Creator. https://decentralizedcreator.com/what-is-stable-diffusion-a-complete-guide-on-how-to-run-copyright-use-cases/

- [4] Borji, A (2022). Generated faces in the wild: Quantitative comparison of Stable Diffusion, Midjourney and DALL-E 2. arXiv:2210.00586v1

- [5] Hachman, M (2022). The new killer app: Creating AI art will absolutely crush your PC. PC World. https://www.pcworld.com/article/916785/creating-ai-art-local-pc-stable-diffusion.html

- [6] Vincent, J (2022). Anyone can use this AI art generator — that’s the risk. The Verge. https://www.theverge.com/2022/9/15/23340673/ai-image-generation-stable-diffusion-explained-ethics-copyright-data

- [7] Appenzeller, G, Bornstein, M, Casado, M and Li, Y (2022). Art Isn’t Dead, It’s Just Machine-Generated. Andreessen Horowitz. https://a16z.com/2022/11/16/creativity-as-an-app/

- [8] Andrew (2022). Absolute beginner’s guide to Stable Diffusion AI image. Stable Diffusion Art. https://stable-diffusion-art.com/beginners-guide/

- [9] Stability AI (2022). Stable Diffusion 2.0 release. Stability AI. https://stability.ai/blog/stable-diffusion-v2-release

- [10] Stability AI (2022). Stable Diffusion public release. Stability AI. https://stability.ai/blog/stable-diffusion-public-release

- [11] Wright, A (2022). Stable Diffusion 2 is here, but not everyone's happy. How-to Geek. https://www.howtogeek.com/850931/stable-diffusion-2-is-here-but-not-everyones-happy/

- [12] Stability AI (2022). Stable Diffusion v2.1 and DreamStudio updates. Stability AI. https://stability.ai/blog/stablediffusion2-1-release7-dec-2022

- [13] Fchollet, Lukewood and Divamgupta (2022). High-performance image generation using Stable Diffusion in KerasCV. TensorFlow. https://www.tensorflow.org/tutorials/generative/generate_images_with_stable_diffusion

- [14] Stability AI. Stability's API platform. Stability AI. https://stability.ai/blog/api-platform-for-stability-ai

- [15] Stability AI (2022). DreamStudio beta updates. Stability AI. https://stability.ai/blog/dreamstudio-update-1-dec-2022

- [16] Wang, ZJ, Montoya, E, Munechika, D, Yang, H, Hoover, B and Chau, DH (2022). DiffusionDB: A large-scale prompt gallery dataset for text-to-image generative models. arXiv:2210.14896v2

- [17] Okuha (2022). VRAM requirements for Stable Diffusion. Okuha. https://www.okuha.com/vram-requirements-for-stable-diffusion/