NVIDIA Triton Inference Server: Difference between revisions

(Created page with "NVIDIA Triton Inference Server is an open-source software that standardizes model deployment and execution, providing fast and scalable AI in production environments. As part of the NVIDIA AI platform, Triton enables teams to deploy, run, and scale trained AI models from any framework on GPU- or CPU-based infrastructure, offering high-performance inference across cloud, on-premises, edge, and embedded devices. === Support for Multiple Frameworks === Triton supports...") |

No edit summary |

||

| (5 intermediate revisions by one other user not shown) | |||

| Line 1: | Line 1: | ||

NVIDIA Triton Inference Server is an open-source | {{see also|Model Deployment|artificial intelligence applications}} | ||

== Introduction == | |||

{{#ev:youtube|1kOaYiNVgFs|400|right}} | |||

NVIDIA Triton Inference Server is an open-source solution that streamlines model deployment and execution, delivering fast and scalable AI in production environments. As a component of the NVIDIA AI platform, Triton allows teams to deploy, run, and scale AI models from any framework on GPU- or CPU-based infrastructures, ensuring high-performance inference across cloud, on-premises, edge, and embedded devices. | |||

=== Support for | == Features == | ||

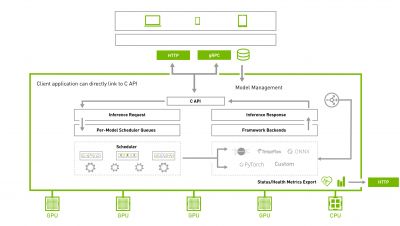

[[File:nvidia triton1.jpg|400px|right]] | |||

=== Support for Diverse Frameworks === | |||

Triton supports | Triton supports numerous training and inference frameworks such as TensorFlow, NVIDIA TensorRT, PyTorch, Python, ONNX, XGBoost, scikit-learn RandomForest, OpenVINO, custom C++, and more. This versatility empowers AI researchers and data scientists to select the most suitable framework for their projects without impacting production deployment. | ||

=== High-Performance Inference === | === High-Performance Inference === | ||

Triton | Triton enables inference on NVIDIA GPU-, x86-, Arm CPU-, and AWS Inferentia-based platforms. By offering dynamic batching, concurrent execution, optimal model configuration, model ensemble, and streaming audio/video inputs, Triton maximizes throughput and resource utilization. | ||

=== | === DevOps and MLOps Integration === | ||

Triton integrates with Kubernetes for orchestration and scaling, exports Prometheus metrics for monitoring, supports live model updates, and is compatible with major public cloud AI and Kubernetes platforms. | Designed with DevOps and MLOps in mind, Triton seamlessly integrates with Kubernetes for orchestration and scaling, exports Prometheus metrics for monitoring, supports live model updates, and is compatible with major public cloud AI and Kubernetes platforms. Many MLOps software solutions also integrate with Triton. | ||

=== | === Model Ensembles and Pipelines Support === | ||

Triton accommodates modern inference requirements, such as multiple models with pre- and post-processing for single queries. It supports model ensembles and pipelines, executing ensemble components on CPU or GPU and utilizing multiple frameworks within the ensemble. | |||

=== | === Accelerating AI in Various Applications === | ||

Triton | Triton delivers high-throughput inference by concurrently executing multiple models on a single GPU or CPU. With dynamic batching, Triton optimizes real-time inferencing under strict latency constraints and supports batch inferencing to maximize GPU and CPU utilization. Furthermore, Triton provides built-in support for audio and video streaming input and enables live updates of models in production without server or application restarts. | ||

== Scalability and Ease | == Scalability and Integration Ease == | ||

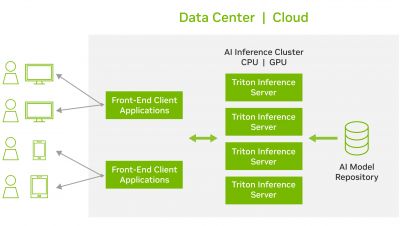

[[File:nvidia triton2.jpg|400px|right]] | |||

Available as a Docker container, Triton easily integrates with Kubernetes for orchestration, metrics, and autoscaling. It supports the standard HTTP/gRPC interface for connections with other applications, such as load balancers, and can scale to any number of servers for handling increasing inference loads for any model. | |||

=== Python Native Support === | |||

PyTriton offers a user-friendly interface for Python developers to utilize Triton Inference Server for serving models, processing functions, or entire inference pipelines. This native support in Python facilitates rapid prototyping and testing of machine learning models while maintaining high hardware utilization. | |||

PyTriton | |||

=== Model Orchestration with Management Service === | === Model Orchestration with Management Service === | ||

Triton | Triton introduces a new model orchestration functionality for efficient multi-model inference. This production service loads models on-demand, unloads inactive models, and allocates GPU resources effectively by placing as many models as possible on a single GPU server. The model orchestration feature is currently in private early access. | ||

=== Large Language Model Inference === | === Large Language Model Inference === | ||

Triton supports inference for large language models | Triton supports inference for large language models like GPT-3 and Megatron, which may not fit on a single GPU. It can partition the model into smaller files and execute each on a separate GPU within or across servers. | ||

=== Optimal Model Configuration with Model Analyzer === | === Optimal Model Configuration with Model Analyzer === | ||

Triton | The Triton Model Analyzer is a tool that automatically evaluates model deployment configurations, such as batch size, precision, and concurrent execution instances on the target processor. It assists in selecting the optimal configuration to meet application quality-of-service (QoS) constraints, reducing time spent on finding the ideal configuration. | ||

=== Tree-Based Model Inference with Forest Inference Library (FIL) Backend === | === Tree-Based Model Inference with Forest Inference Library (FIL) Backend === | ||

The Forest Inference Library (FIL) backend in Triton | The Forest Inference Library (FIL) backend in Triton enables high-performance inference of tree-based models with explainability (SHAP values) on CPUs and GPUs. It supports models from XGBoost, LightGBM, scikit-learn RandomForest, RAPIDS cuML RandomForest, and others in Treelite format. | ||

== Ecosystem Integrations == | == Ecosystem Integrations == | ||

| Line 51: | Line 56: | ||

== Success Stories == | == Success Stories == | ||

Companies such as Amazon, American Express, and Siemens Energy have successfully adopted NVIDIA Triton to enhance customer satisfaction, fraud detection, and physical inspections through AI-based remote monitoring. | |||

== Resources and Support == | == Resources and Support == | ||

NVIDIA provides global enterprise support for Triton through its NVIDIA AI Enterprise offering, which includes guaranteed response times, priority security notifications, regular updates, and access to NVIDIA AI experts. Additional resources, such as whitepapers, on-demand sessions, and blog posts, are available to learn more about Triton and its capabilities. | |||

== NVIDIA LaunchPad == | == NVIDIA LaunchPad == | ||

NVIDIA LaunchPad | NVIDIA LaunchPad offers access to hosted infrastructure and allows users to experience Triton Inference Server through free curated labs. | ||

== Community and Updates == | == Community and Updates == | ||

The Triton community | The Triton community provides a platform for staying current on the latest feature updates, bug fixes, and more. By joining the community, users can connect with other professionals, share experiences, and learn from best practices. | ||

== Extensive Use Cases and Applications == | == Extensive Use Cases and Applications == | ||

| Line 71: | Line 76: | ||

== Developer Documentation and Tutorials == | == Developer Documentation and Tutorials == | ||

NVIDIA | NVIDIA offers comprehensive documentation and tutorials to help developers get started with Triton Inference Server. These resources cover topics like installation, configuration, model deployment, performance optimization, and integration with popular frameworks and services. | ||

== Contributions and Open-Source Development == | == Contributions and Open-Source Development == | ||

| Line 80: | Line 85: | ||

NVIDIA continues to invest in Triton's development, incorporating new features and improvements based on user feedback and industry needs. Upcoming advancements may include additional framework support, improved orchestration capabilities, enhanced performance optimization, and more. | NVIDIA continues to invest in Triton's development, incorporating new features and improvements based on user feedback and industry needs. Upcoming advancements may include additional framework support, improved orchestration capabilities, enhanced performance optimization, and more. | ||

[[Category:Model Deployment]] [[Category:Inference]] [[Category:Servers]] [[Category:DevOps]] | |||

Latest revision as of 16:16, 29 March 2023

- See also: Model Deployment and artificial intelligence applications

Introduction

NVIDIA Triton Inference Server is an open-source solution that streamlines model deployment and execution, delivering fast and scalable AI in production environments. As a component of the NVIDIA AI platform, Triton allows teams to deploy, run, and scale AI models from any framework on GPU- or CPU-based infrastructures, ensuring high-performance inference across cloud, on-premises, edge, and embedded devices.

Features

Support for Diverse Frameworks

Triton supports numerous training and inference frameworks such as TensorFlow, NVIDIA TensorRT, PyTorch, Python, ONNX, XGBoost, scikit-learn RandomForest, OpenVINO, custom C++, and more. This versatility empowers AI researchers and data scientists to select the most suitable framework for their projects without impacting production deployment.

High-Performance Inference

Triton enables inference on NVIDIA GPU-, x86-, Arm CPU-, and AWS Inferentia-based platforms. By offering dynamic batching, concurrent execution, optimal model configuration, model ensemble, and streaming audio/video inputs, Triton maximizes throughput and resource utilization.

DevOps and MLOps Integration

Designed with DevOps and MLOps in mind, Triton seamlessly integrates with Kubernetes for orchestration and scaling, exports Prometheus metrics for monitoring, supports live model updates, and is compatible with major public cloud AI and Kubernetes platforms. Many MLOps software solutions also integrate with Triton.

Model Ensembles and Pipelines Support

Triton accommodates modern inference requirements, such as multiple models with pre- and post-processing for single queries. It supports model ensembles and pipelines, executing ensemble components on CPU or GPU and utilizing multiple frameworks within the ensemble.

Accelerating AI in Various Applications

Triton delivers high-throughput inference by concurrently executing multiple models on a single GPU or CPU. With dynamic batching, Triton optimizes real-time inferencing under strict latency constraints and supports batch inferencing to maximize GPU and CPU utilization. Furthermore, Triton provides built-in support for audio and video streaming input and enables live updates of models in production without server or application restarts.

Scalability and Integration Ease

Available as a Docker container, Triton easily integrates with Kubernetes for orchestration, metrics, and autoscaling. It supports the standard HTTP/gRPC interface for connections with other applications, such as load balancers, and can scale to any number of servers for handling increasing inference loads for any model.

Python Native Support

PyTriton offers a user-friendly interface for Python developers to utilize Triton Inference Server for serving models, processing functions, or entire inference pipelines. This native support in Python facilitates rapid prototyping and testing of machine learning models while maintaining high hardware utilization.

Model Orchestration with Management Service

Triton introduces a new model orchestration functionality for efficient multi-model inference. This production service loads models on-demand, unloads inactive models, and allocates GPU resources effectively by placing as many models as possible on a single GPU server. The model orchestration feature is currently in private early access.

Large Language Model Inference

Triton supports inference for large language models like GPT-3 and Megatron, which may not fit on a single GPU. It can partition the model into smaller files and execute each on a separate GPU within or across servers.

Optimal Model Configuration with Model Analyzer

The Triton Model Analyzer is a tool that automatically evaluates model deployment configurations, such as batch size, precision, and concurrent execution instances on the target processor. It assists in selecting the optimal configuration to meet application quality-of-service (QoS) constraints, reducing time spent on finding the ideal configuration.

Tree-Based Model Inference with Forest Inference Library (FIL) Backend

The Forest Inference Library (FIL) backend in Triton enables high-performance inference of tree-based models with explainability (SHAP values) on CPUs and GPUs. It supports models from XGBoost, LightGBM, scikit-learn RandomForest, RAPIDS cuML RandomForest, and others in Treelite format.

Ecosystem Integrations

Triton is supported by a variety of cloud platforms and services, including Alibaba Cloud, Amazon Elastic Kubernetes Service (EKS), Amazon Elastic Container Service (ECS), Amazon SageMaker, Google Kubernetes Engine (GKE), Google Vertex AI, HPE Ezmeral, Microsoft Azure Kubernetes Service (AKS), Azure Machine Learning, and Oracle Cloud Infrastructure Data Science Platform.

Success Stories

Companies such as Amazon, American Express, and Siemens Energy have successfully adopted NVIDIA Triton to enhance customer satisfaction, fraud detection, and physical inspections through AI-based remote monitoring.

Resources and Support

NVIDIA provides global enterprise support for Triton through its NVIDIA AI Enterprise offering, which includes guaranteed response times, priority security notifications, regular updates, and access to NVIDIA AI experts. Additional resources, such as whitepapers, on-demand sessions, and blog posts, are available to learn more about Triton and its capabilities.

NVIDIA LaunchPad

NVIDIA LaunchPad offers access to hosted infrastructure and allows users to experience Triton Inference Server through free curated labs.

Community and Updates

The Triton community provides a platform for staying current on the latest feature updates, bug fixes, and more. By joining the community, users can connect with other professionals, share experiences, and learn from best practices.

Extensive Use Cases and Applications

NVIDIA Triton is widely used across various industries and applications, such as healthcare, finance, retail, manufacturing, and logistics. It accelerates workloads for speech recognition, recommender systems, medical imaging, natural language processing, and more.

Developer Documentation and Tutorials

NVIDIA offers comprehensive documentation and tutorials to help developers get started with Triton Inference Server. These resources cover topics like installation, configuration, model deployment, performance optimization, and integration with popular frameworks and services.

Contributions and Open-Source Development

As an open-source project, NVIDIA encourages contributions from developers and researchers to enhance Triton's capabilities, performance, and stability. By actively participating in the project's development, users can shape the future of AI inference and model deployment.

Future Developments

NVIDIA continues to invest in Triton's development, incorporating new features and improvements based on user feedback and industry needs. Upcoming advancements may include additional framework support, improved orchestration capabilities, enhanced performance optimization, and more.