Vector embeddings: Difference between revisions

No edit summary |

No edit summary |

||

| (18 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{see also|AI terms}} | |||

==Introduction== | ==Introduction== | ||

Vector embeddings are | [[Vector embeddings]] are lists of numbers used to represent complex data like [[text]], [[images]], or [[audio]] in a numerical format enabling [[machine learning algorithms]] to process them. These embeddings translate [[semantic similarity]] between objects into proximity within a [[vector space]], making them suitable for tasks such as [[clustering]], [[recommendation]], and [[classification]]. [[Clustering algorithms]] group similar points together, [[recommendation systems]] find similar objects, and [[classification tasks]] determine the label of an object based on its most similar counterparts. | ||

==Understanding Vector Embeddings== | ==Understanding Vector Embeddings== | ||

In the context of text data, words with similar meanings, such as " | ===Dog and Puppy Example=== | ||

In the context of text data, words with similar meanings, such as "dog" and "puppy", must be represented to capture their [[semantic similarity]]. [[Vector representation]]s achieve this by transforming data objects into arrays of real numbers with a fixed length, typically ranging from hundreds to thousands of elements. These arrays are generated by machine learning models through a process called [[vectorization]]. | |||

For instance, the words " | For instance, the words "dog" and "puppy" may be vectorized as follows: | ||

< | <poem style="border: 1px solid; padding: 1rem"> | ||

dog = [1.5, -0.4, 7.2, 19.6, 3.1, ..., 20.2] | |||

puppy = [1.5, -0.4, 7.2, 19.5, 3.2, ..., 20.8] | |||

</ | </poem> | ||

These vectors exhibit a high similarity, while vectors for words like "banjo" or "comedy" would not be similar to either of these. In this way, vector embeddings capture the semantic similarity of words. The specific meaning of each number in a vector depends on the machine learning model that generated the vectors, and is not always clear in terms of human understanding of language and meaning. | These vectors exhibit a high similarity, while vectors for words like "banjo" or "comedy" would not be similar to either of these. In this way, vector embeddings capture the semantic similarity of words. The specific meaning of each number in a vector depends on the machine learning model that generated the vectors, and is not always clear in terms of human understanding of language and meaning. | ||

===King and Queen Example=== | |||

Vector-based representation of meaning has gained attention due to its ability to perform mathematical operations between words, revealing semantic relationships. A famous example is: | Vector-based representation of meaning has gained attention due to its ability to perform mathematical operations between words, revealing semantic relationships. A famous example is: | ||

| Line 22: | Line 25: | ||

This result suggests that the difference between "king" and "man" represents some sort of "royalty", which is analogously applicable to "queen" minus "woman". Various concepts, such as "woman", "girl", "boy", etc., can be vectorized into arrays of numbers, often referred to as dimensions. These arrays can be visualized and correlated to familiar words, giving insight into their meaning. | This result suggests that the difference between "king" and "man" represents some sort of "royalty", which is analogously applicable to "queen" minus "woman". Various concepts, such as "woman", "girl", "boy", etc., can be vectorized into arrays of numbers, often referred to as dimensions. These arrays can be visualized and correlated to familiar words, giving insight into their meaning. | ||

===Example of 5 Words=== | |||

Objects (data): words such as kitty, puppy, orange, blueberry, structure, motorbike | |||

Search term: fruit | |||

A basic set of vector embeddings (limited to 5 dimensions) for the objects and the search term might appear as follows: | |||

Vector embeddings can be generated for various media types, such as text, images, audio, and others. For text, vectorization techniques have significantly evolved over the last decade, from word2vec (2013) to the state-of-the-art transformer models era, which began with the release of BERT in 2018. | {| class="wikitable" | ||

! Word !! Vector Embedding | |||

|- | |||

| kitty || [1.5, -0.4, 7.2, 19.6, 20.2] | |||

|- | |||

| puppy || [1.7, -0.3, 6.9, 19.1, 21.1] | |||

|- | |||

| orange || [-5.2, 3.1, 0.2, 8.1, 3.5] | |||

|- | |||

| blueberry || [-4.9, 3.6, 0.9, 7.8, 3.6] | |||

|- | |||

| strcuture || [60.1, -60.3, 10, -12.3, 9.2] | |||

|- | |||

| motorbike || [81.6, -72.1, 16, -20.2, 102] | |||

|- | |||

| fruit || [-5.1, 2.9, 0.8, 7.9, 3.1] | |||

|} | |||

Upon examining each of the 5 components of the vectors, it's evident that kitty and puppy are much closer than puppy and orange (we don't even have to determine the distances). Similarly, fruit is significantly closer to orange and blueberry compared to the other words, making them the top results for the "fruit" search. | |||

===More Than Just Words=== | |||

Vector embeddings can represent more than just word meanings. They can effectively be generated from any data object, including [[text]], [[images]], [[audio]], [[time series data]], [[3D models]], [[video]], and [[molecules]]. Embeddings are constructed such that two objects with similar semantics have vectors that are "close" to each other in vector space, with a "small" distance between them. | |||

==Creating Vector Embeddings== | |||

===Feature Engineering=== | |||

One method for creating vector embeddings involves engineering the vector values using [[domain knowledge]], a process known as [[feature engineering]]. For instance, in medical imaging, domain expertise is employed to quantify features such as shape, color, and regions within an image to capture semantics. However, feature engineering requires domain knowledge and is often too costly to scale. | |||

===Machine Learning Models=== | |||

Rather than engineering vector embeddings, [[models]] are frequently trained to translate objects into vectors. [[Deep neural network]]s are commonly used for training such models. The resulting embeddings are typically [[high-dimensional]] (up to two thousand dimensions) and [[dense]] (all values are non-zero). Text data can be transformed into vector embeddings using models such as [[Word2Vec]], [[GLoVE]], and [[BERT]]. Images can be embedded using [[convolutional neural network]]s ([[CNN]]s) like [[VGG]] and [[Inception]], while audio recordings can be converted into vectors using [[image embedding transformation]]s over their visual representations, such as [[spectrogram]]s. | |||

==Generating Vector Embeddings Using ML Models== | |||

The primary aspect of vector search's effectiveness lies in generating embeddings for each [[entity]] and [[query]]. The secondary aspect is efficiently searching within very large [[dataset]]s. | |||

Vector embeddings can be generated for various media types, such as text, images, audio, and others. For text, vectorization techniques have significantly evolved over the last decade, from [[word2vec]] (2013) to the state-of-the-art [[transformer]] models era, which began with the release of [[BERT]] in 2018. | |||

===Word-level Dense Vector Models (word2vec, GloVe, etc.)=== | ===Word-level Dense Vector Models (word2vec, GloVe, etc.)=== | ||

word2vec is a group of model architectures that introduced the concept of | [[word2vec]] is a group of model architectures that introduced the concept of [[dense]] vectors in language processing, in which all values are non-zero. It uses a [[neural network]] model to learn word associations from a large text corpus. The model first creates a vocabulary from the corpus and then learns vector representations for the words, usually with 300 dimensions. Words found in similar contexts have vector representations that are close in vector space. | ||

However, word2vec suffers from limitations, including its inability to address words with multiple meanings (polysemantic) and words with ambiguous meanings. | However, word2vec suffers from limitations, including its inability to address words with multiple meanings ([[polysemantic]]) and words with ambiguous meanings. | ||

===Transformer Models (BERT, ELMo, and others)=== | ===Transformer Models (BERT, ELMo, and others)=== | ||

The current state-of-the-art models are based on the transformer architecture. Models like BERT and its successors improve search accuracy, precision, and recall by examining the context of each word to create full contextual embeddings. Unlike word2vec embeddings, which are context-agnostic, transformer-generated embeddings consider the entire input text. Each occurrence of a word has its own embedding that is influenced by the surrounding text, better reflecting the polysemantic nature of words, which can only be disambiguated when considered in context. | The current state-of-the-art models are based on the [[transformer architecture]]. Models like [[BERT]] and its successors improve search accuracy, precision, and recall by examining the context of each word to create full contextual embeddings. Unlike [[word2vec embeddings]], which are context-agnostic, [[transformer-generated embeddings]] consider the entire input text. Each occurrence of a word has its own embedding that is influenced by the surrounding text, better reflecting the [[polysemantic]] nature of words, which can only be disambiguated when considered in context. | ||

Some potential downsides of transformer models include: | Some potential downsides of transformer models include: | ||

Increased compute requirements: Fine-tuning transformer models is much slower (taking hours instead of minutes). | *Increased compute requirements: Fine-tuning transformer models is much slower (taking hours instead of minutes). | ||

Increased memory requirements: Context-sensitivity greatly increases memory requirements, often leading to limitations on possible input lengths. | *Increased memory requirements: Context-sensitivity greatly increases memory requirements, often leading to limitations on possible input lengths. | ||

Despite these drawbacks, transformer models have been incredibly successful, leading to a proliferation of text vectorizer models for various data types such as audio, video, and images. Some models, like CLIP, can vectorize multiple data types (e.g., images and text) into a single vector space, enabling content-based image searches using only text. | |||

Despite these drawbacks, [[transformer models]] have been incredibly successful, leading to a proliferation of text vectorizer models for various data types such as audio, video, and images. Some models, like [[CLIP]], can vectorize multiple data types (e.g., images and text) into a single vector space, enabling content-based image searches using only text. | |||

==Creating Vector Embeddings for Non-text Media== | |||

In addition to text, vector embeddings can be created for various types of data, such as [[images]] and [[audio]] recordings. Images can be embedded using [[convolutional neural network]]s ([[CNN]]s) like [[VGG]] and [[Inception]], while audio recordings can be converted into vectors using [[image embedding transformation]]s over their [[visual representation]]s, such as [[spectrograms]]. | |||

===Example: Image Embedding with a Convolutional Neural Network=== | |||

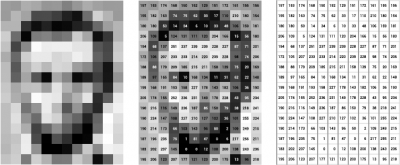

[[File:image embedding with cnn1.png|400px|right]] | |||

In this example, raw images are represented as greyscale pixels, which correspond to a matrix of integer values ranging from 0 to 255, where 0 signifies black and 255 represents white. The matrix values define a vector embedding, with the first coordinate being the matrix's upper-left cell and the last coordinate corresponding to the lower-right matrix cell. | |||

While such embeddings effectively maintain the semantic information of a pixel's neighborhood in an image, they are highly sensitive to transformations like [[shifts]], [[scaling]], [[cropping]], and other [[image manipulation]] operations. Consequently, they are often used as raw inputs to learn more robust embeddings. | |||

A Convolutional Neural Network (CNN or [[ConvNet]]) is a class of [[deep learning architecture]]s typically applied to visual data, transforming images into embeddings. CNNs process input through hierarchical small local sub-inputs known as receptive fields. Each neuron in each network layer processes a specific receptive field from the previous layer. Each layer either applies a convolution on the receptive field or reduces the input size through a process called subsampling. | |||

A typical CNN structure includes receptive fields as sub-squares in each layer, serving as input to a single [[neuron]] within the preceding layer. Subsampling operations reduce layer size, while convolution operations expand layer size. The resulting vector embedding is obtained through a fully connected layer. | |||

Learning the network [[weights]] (i.e., the embedding model) requires a large set of [[label]]ed images. The weights are optimized to ensure that images with the same labels have closer embeddings compared to those with different labels. Once the CNN embedding model is learned, images can be transformed into vectors and stored with a [[K-Nearest-Neighbor]] index. For a new unseen image, it can be transformed using the CNN model, its [[k-most similar vector]]s can be retrieved, and the corresponding similar images can be identified. | |||

Although this example focuses on images and CNNs, vector embeddings can be created for various types of data, and multiple models or methods can be employed to generate them. | |||

==Using Vector Embeddings== | |||

Vector embeddings' ability to represent objects as dense vectors containing their semantic information makes them highly valuable for a wide array of machine learning applications. | |||

One of the most popular uses of vector embeddings is [[similarity search]]. Search algorithms like [[KNN]] and [[ANN]] necessitate calculating distances between vectors to determine similarity. Vector embeddings can be used to compute these distances. Nearest neighbor search can then be utilized for tasks such as [[deduplication]], [[recommendations]], [[anomaly detection]], and [[reverse image search]]. | |||

Even if embeddings are not directly used for an application, many popular machine learning models and methods rely on them internally. For instance, in [[encoder-decoder architectures]], the embeddings generated by the encoder contain the required information for the decoder to produce a result. This architecture is widely employed in applications like [[machine translation]] and [[caption generation]]. | |||

===Products=== | |||

[[Vector database]] | |||

==Explain {{PAGENAME}} Like I'm 5 (ELI5)== | |||

Imagine you have a box of different toys like cars, dolls, and balls. Now, we want to sort these toys based on how similar they are. We can use something called "vector embedding" to help us with this. Vector embedding is like giving each toy a secret code made of numbers. Toys that are similar will have secret codes that are very close to each other, and toys that are not similar will have secret codes that are very different. | |||

For example, let's say we have a red car, a blue car, and a doll. We can give them secret codes like this: | |||

<poem style="border: 1px solid; padding: 1rem"> | |||

Red car: [1, 2, 3] | |||

Blue car: [1, 2, 4] | |||

Doll: [5, 6, 7] | |||

</poem> | |||

See how the red car and the blue car have secret codes that are very close to each other, while the doll has a different secret code? That's because the cars are more similar to each other than the doll. | |||

Vector embedding can also be used for words, pictures, sounds, and many other things. It helps computers understand and sort these things by how similar they are, just like we sorted the toys! | |||

[[Category:Terms]] [[Category:Artificial intelligence terms]] | |||

Latest revision as of 17:39, 8 April 2023

- See also: AI terms

Introduction

Vector embeddings are lists of numbers that represent complex data such as text, images, or audio in a numerical form that machine learning algorithms can process. These embeddings translate semantic similarity between objects into proximity within a vector space, making them suitable for tasks such as clustering, recommendation, and classification. Clustering algorithms group similar points together, recommendation systems find similar objects, and classification tasks determine the label of an object based on its most similar counterparts.

Understanding Vector Embeddings

Dog and Puppy Example

In the context of text data, words with similar meanings, such as "dog" and "puppy", should receive representations that reflect their semantic similarity. Vector representations achieve this by transforming data objects into fixed-length arrays of real numbers, typically with hundreds to thousands of elements. These arrays are generated by machine learning models through a process called vectorization.

For instance, the words "dog" and "puppy" may be vectorized as follows:

dog = [1.5, -0.4, 7.2, 19.6, 3.1, ..., 20.2]

puppy = [1.5, -0.4, 7.2, 19.5, 3.2, ..., 20.8]

These vectors exhibit a high similarity, while vectors for words like "banjo" or "comedy" would not be similar to either of these. In this way, vector embeddings capture the semantic similarity of words. The specific meaning of each number in a vector depends on the machine learning model that generated the vectors, and is not always clear in terms of human understanding of language and meaning.

King and Queen Example

Vector-based representation of meaning has gained attention due to its ability to perform mathematical operations between words, revealing semantic relationships. A famous example is:

"king − man + woman ≈ queen"

This result suggests that the difference between "king" and "man" represents some sort of "royalty", which is analogously applicable to "queen" minus "woman". Various concepts, such as "woman", "girl", "boy", etc., can be vectorized into arrays of numbers, whose individual positions are often referred to as dimensions. These arrays can be visualized and correlated to familiar words, giving insight into their meaning.
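The arithmetic behind this analogy can be sketched with a handful of toy vectors. The three-dimensional values below are invented for illustration (they do not come from any trained model), and the helper names `cosine_similarity` and `analogy` are hypothetical. As is common in such demonstrations, the three input words are excluded from the candidate answers.

```python
import math

# Hypothetical toy embeddings, invented for this illustration.
# The dimensions loosely stand for (royalty, maleness, femaleness).
toy_vectors = {
    "king":  [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.9],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "banjo": [0.0, 0.3, 0.2],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, vectors):
    """Return the word whose vector is closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the query words themselves from the candidates.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine_similarity(vectors[w], target))

result = analogy("king", "man", "woman", toy_vectors)
print(result)
```

With real embeddings the match is only approximate, which is why the nearest remaining word is returned rather than an exact equality being tested.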

Example of 6 Words

Objects (data): words such as kitty, puppy, orange, blueberry, structure, motorbike

Search term: fruit

A basic set of vector embeddings (limited to 5 dimensions) for the objects and the search term might appear as follows:

| Word | Vector Embedding |
|---|---|
| kitty | [1.5, -0.4, 7.2, 19.6, 20.2] |
| puppy | [1.7, -0.3, 6.9, 19.1, 21.1] |
| orange | [-5.2, 3.1, 0.2, 8.1, 3.5] |
| blueberry | [-4.9, 3.6, 0.9, 7.8, 3.6] |
| structure | [60.1, -60.3, 10, -12.3, 9.2] |
| motorbike | [81.6, -72.1, 16, -20.2, 102] |
| fruit | [-5.1, 2.9, 0.8, 7.9, 3.1] |

Upon examining each of the 5 components of the vectors, it's evident that kitty and puppy are much closer than puppy and orange (we don't even have to determine the distances). Similarly, fruit is significantly closer to orange and blueberry compared to the other words, making them the top results for the "fruit" search.
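The comparison above can also be checked numerically. The following sketch takes the vectors from the table and ranks the six words by Euclidean distance to the "fruit" query. Euclidean distance is one reasonable choice here and is an assumption of this example, since the text does not name a specific metric.

```python
import math

# Vectors copied from the table above.
embeddings = {
    "kitty":     [1.5, -0.4, 7.2, 19.6, 20.2],
    "puppy":     [1.7, -0.3, 6.9, 19.1, 21.1],
    "orange":    [-5.2, 3.1, 0.2, 8.1, 3.5],
    "blueberry": [-4.9, 3.6, 0.9, 7.8, 3.6],
    "structure": [60.1, -60.3, 10, -12.3, 9.2],
    "motorbike": [81.6, -72.1, 16, -20.2, 102],
}
query = [-5.1, 2.9, 0.8, 7.9, 3.1]  # the search term "fruit"

def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Sort the words from closest to farthest from the query.
ranked = sorted(embeddings, key=lambda w: euclidean_distance(embeddings[w], query))

for word in ranked:
    print(f"{word:10s} {euclidean_distance(embeddings[word], query):8.2f}")
```

The two fruit words come out first in the ranking, and the distance between kitty and puppy is far smaller than the distance between puppy and orange.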

More Than Just Words

Vector embeddings can represent more than just word meanings. They can effectively be generated from any data object, including text, images, audio, time series data, 3D models, video, and molecules. Embeddings are constructed such that two objects with similar semantics have vectors that are "close" to each other in vector space, with a "small" distance between them.

Creating Vector Embeddings

Feature Engineering

One method for creating vector embeddings involves engineering the vector values using domain knowledge, a process known as feature engineering. For instance, in medical imaging, domain expertise is employed to quantify features such as shape, color, and regions within an image to capture semantics. However, feature engineering requires domain knowledge and is often too costly to scale.
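As a rough illustration of feature engineering, the sketch below maps a small greyscale image to a three-element vector of hand-chosen features. The specific features (mean intensity, fraction of bright pixels, aspect ratio) and the function name `handcrafted_features` are hypothetical choices made for this example; real domains such as medical imaging use far more specialized measurements.

```python
def handcrafted_features(image, bright_threshold=128):
    """Map a greyscale image (list of rows, values 0-255) to a small feature vector."""
    height = len(image)
    width = len(image[0])
    pixels = [p for row in image for p in row]
    # Average brightness, scaled to the range 0..1.
    mean_intensity = sum(pixels) / len(pixels) / 255.0
    # Share of pixels at or above the brightness threshold.
    bright_fraction = sum(1 for p in pixels if p >= bright_threshold) / len(pixels)
    # Simple shape descriptor: width divided by height.
    aspect_ratio = width / height
    return [mean_intensity, bright_fraction, aspect_ratio]

# A 2x4 image: left half black, right half white.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
features = handcrafted_features(image)
print(features)
```

Every feature here had to be chosen and coded by a person, which is the scaling cost the paragraph above refers to.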

Machine Learning Models

Rather than engineering vector embeddings by hand, models are frequently trained to translate objects into vectors. Deep neural networks are commonly used for this. The resulting embeddings are typically high-dimensional (often hundreds to a couple of thousand dimensions) and dense (most or all values are non-zero). Text data can be transformed into vector embeddings using models such as Word2Vec, GloVe, and BERT. Images can be embedded using convolutional neural networks (CNNs) like VGG and Inception, while audio recordings can be converted into vectors using image embedding transformations over their visual representations, such as spectrograms.

Generating Vector Embeddings Using ML Models

The effectiveness of vector search depends first on generating good embeddings for each entity and query, and second on searching efficiently within very large datasets.

Vector embeddings can be generated for various media types, such as text, images, audio, and others. For text, vectorization techniques have evolved significantly over the last decade, from word2vec (2013) to the transformer-based models that have dominated since the release of BERT in 2018.

Word-level Dense Vector Models (word2vec, GloVe, etc.)

word2vec is a group of model architectures that popularized dense vectors in language processing, in which most or all values are non-zero. It uses a neural network model to learn word associations from a large text corpus. The model first creates a vocabulary from the corpus and then learns vector representations for the words, often with a few hundred dimensions (300 is a common choice). Words found in similar contexts have vector representations that are close in vector space.
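The data-preparation side of this process can be sketched in a few lines. The example below builds a vocabulary and generates (center, context) training pairs of the kind used by the skip-gram variant of word2vec. It deliberately stops before any neural network training, and the function names, the tiny corpus, and the window size are assumptions made for illustration.

```python
def build_vocabulary(tokens):
    """Assign each distinct token an integer id, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

def skipgram_pairs(tokens, window=2):
    """Pair every token with the tokens at most `window` positions away."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

# A tiny stand-in for a "large text corpus".
corpus = "the puppy chased the ball".split()
vocab = build_vocabulary(corpus)
pairs = skipgram_pairs(corpus, window=1)

print(vocab)
print(pairs)
```

Words that share many context words end up with many similar training pairs, which is what pushes their learned vectors close together.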

However, word2vec has limitations. Because it assigns a single vector to each word, it cannot distinguish between the different senses of polysemous or otherwise ambiguous words.

Transformer Models (BERT, ELMo, and others)

The current state-of-the-art models are based on the transformer architecture. Models like BERT and its successors improve search accuracy, precision, and recall by examining the context of each word to create fully contextual embeddings. (ELMo, an earlier contextual model, is built on bidirectional LSTMs rather than transformers.) Unlike word2vec embeddings, which are context-agnostic, transformer-generated embeddings take the entire input text into account. Each occurrence of a word gets its own embedding, influenced by the surrounding text, which better reflects polysemous words whose meaning can only be disambiguated in context.

Some potential downsides of transformer models include:

- Increased compute requirements: Training and fine-tuning transformer models is much slower (it can take hours instead of minutes).

- Increased memory requirements: Context-sensitivity greatly increases memory requirements. In standard self-attention, memory grows roughly with the square of the input length, which often leads to limits on possible input lengths.
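To make the memory point concrete, here is a back-of-the-envelope calculation of the space needed for the attention score matrices of a single layer. It assumes standard full self-attention (one score per pair of tokens), 12 attention heads, and 4-byte floating-point values. All three are assumptions of this sketch, and real models have additional memory costs that are not counted here.

```python
def attention_score_bytes(sequence_length, num_heads=12, bytes_per_value=4):
    """Bytes for one layer's attention scores, assuming full self-attention.

    Each head stores one score for every (query token, key token) pair,
    so the count grows with the square of the sequence length.
    """
    return num_heads * sequence_length * sequence_length * bytes_per_value

for n in (128, 512, 2048):
    mib = attention_score_bytes(n) / (1024 ** 2)
    print(f"{n:5d} tokens -> {mib:8.1f} MiB per layer")
```

Doubling the input length quadruples this figure, which is one reason many models cap the number of input tokens.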

Despite these drawbacks, transformer models have been incredibly successful, leading to a proliferation of vectorizer models for text and for other data types such as audio, video, and images. Some models, like CLIP, can vectorize multiple data types (e.g., images and text) into a single vector space, enabling content-based image searches using only text.

Creating Vector Embeddings for Non-text Media

In addition to text, vector embeddings can be created for other types of data, such as images and audio recordings. The example below walks through image embedding with a convolutional neural network (CNN).

Example: Image Embedding with a Convolutional Neural Network

In this example, raw images are represented as greyscale pixels, which correspond to a matrix of integer values ranging from 0 to 255, where 0 signifies black and 255 represents white. The matrix values define a vector embedding, with the first coordinate being the matrix's upper-left cell and the last coordinate corresponding to the lower-right matrix cell.

While such embeddings effectively maintain the semantic information of a pixel's neighborhood in an image, they are highly sensitive to transformations like shifts, scaling, cropping, and other image manipulation operations. Consequently, they are often used as raw inputs to learn more robust embeddings.
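The raw-pixel embedding and its sensitivity to shifts can be sketched as follows. The 4x4 image, the helper names, and the use of Euclidean distance are assumptions made for this illustration.

```python
import math

def flatten(image):
    """Row-major flattening: upper-left pixel first, lower-right pixel last."""
    return [pixel for row in image for pixel in row]

def shift_right(image, fill=0):
    """Shift every row one pixel to the right, padding with `fill` on the left."""
    return [[fill] + row[:-1] for row in image]

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# A 4x4 greyscale image with a white vertical stripe in the second column.
image = [
    [0, 255, 0, 0],
    [0, 255, 0, 0],
    [0, 255, 0, 0],
    [0, 255, 0, 0],
]

original_vec = flatten(image)
shifted_vec = flatten(shift_right(image))
distance = euclidean_distance(original_vec, shifted_vec)

print(original_vec)
print(f"distance after a one-pixel shift: {distance:.1f}")
```

To a human the two images show the same stripe, yet eight of the sixteen coordinates change by the full 0 to 255 range, so the raw vectors end up far apart.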

A Convolutional Neural Network (CNN or ConvNet) is a class of deep learning architectures typically applied to visual data, transforming images into embeddings. CNNs process input through hierarchical small local sub-inputs known as receptive fields. Each neuron in each network layer processes a specific receptive field from the previous layer. Each layer either applies a convolution on the receptive field or reduces the input size through a process called subsampling.

In a typical CNN, receptive fields appear as sub-squares of each layer, and each one serves as the input to a single neuron in the following layer. Subsampling operations reduce the spatial size of a layer, while convolution operations typically increase the number of feature maps (channels). The resulting vector embedding is obtained through a fully connected layer.
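The two layer operations described above can be sketched in plain Python. This is a minimal illustration with a single hand-written filter, not a trained network: the edge-detecting kernel, the 5x5 input, and the function names are assumptions of the example, and no padding is applied, so the convolution output is slightly smaller than its input.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image; each output value sees one receptive field."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool2x2(feature_map):
    """Subsampling: keep the maximum of each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]

# 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 255, 255, 255] for _ in range(5)]
# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = convolve2d(image, kernel)   # 4x4 map of edge responses
pooled = max_pool2x2(feature_map)         # 2x2 map after subsampling

print(feature_map)
print(pooled)
```

In a real CNN the kernel values are learned rather than written by hand, and many kernels are applied per layer, each producing its own feature map.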

Learning the network weights (i.e., the embedding model) requires a large set of labeled images. The weights are optimized so that images with the same label have closer embeddings than images with different labels. Once the CNN embedding model is learned, images can be transformed into vectors and stored in a K-Nearest-Neighbor index. A new, unseen image can then be transformed using the CNN model, its k most similar vectors retrieved from the index, and the corresponding similar images identified.
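The store-and-retrieve step can be sketched with a brute-force index that compares the query against every stored vector. The class name `BruteForceIndex`, the image ids, and the three-dimensional vectors are hypothetical. In practice the vectors would come from the trained CNN, and large collections would usually use an approximate index instead of an exhaustive scan.

```python
import heapq
import math

class BruteForceIndex:
    """A minimal exact k-nearest-neighbor index over stored vectors."""

    def __init__(self):
        self._items = []  # list of (item_id, vector) tuples

    def add(self, item_id, vector):
        self._items.append((item_id, vector))

    def query(self, vector, k=3):
        """Return the k closest items as (distance, item_id), nearest first."""
        return heapq.nsmallest(
            k,
            ((math.dist(vector, stored), item_id)
             for item_id, stored in self._items),
        )

# Hypothetical embeddings standing in for CNN outputs.
index = BruteForceIndex()
index.add("cat_1", [0.9, 0.1, 0.0])
index.add("cat_2", [0.8, 0.2, 0.1])
index.add("car_1", [0.1, 0.9, 0.7])
index.add("car_2", [0.0, 0.8, 0.9])

# Embedding of a new, unseen image (also invented for this sketch).
neighbors = index.query([0.9, 0.15, 0.0], k=2)
neighbor_ids = [item_id for _, item_id in neighbors]
print(neighbor_ids)
```

The query costs one distance computation per stored vector, which is acceptable for small collections and is the baseline that approximate methods try to beat.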

Although this example focuses on images and CNNs, vector embeddings can be created for various types of data, and multiple models or methods can be employed to generate them.

Using Vector Embeddings

Vector embeddings' ability to represent objects as dense vectors containing their semantic information makes them highly valuable for a wide array of machine learning applications.

One of the most popular uses of vector embeddings is similarity search. Search algorithms like k-nearest neighbors (KNN) and approximate nearest neighbor (ANN) search rely on distances between vectors to determine similarity, and embeddings provide the vectors on which those distances are computed. Nearest neighbor search can then be used for tasks such as deduplication, recommendations, anomaly detection, and reverse image search.
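As one example of these tasks, deduplication can be sketched by flagging pairs of items whose embeddings point in almost the same direction. The document ids, vectors, and the 0.99 cosine-similarity threshold below are all assumptions chosen for illustration; a suitable threshold depends on the embedding model and the data.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def find_near_duplicates(vectors, threshold=0.99):
    """Return all pairs of ids whose cosine similarity meets the threshold."""
    ids = list(vectors)
    pairs = []
    for i in range(len(ids)):
        for j in range(i + 1, len(ids)):
            if cosine_similarity(vectors[ids[i]], vectors[ids[j]]) >= threshold:
                pairs.append((ids[i], ids[j]))
    return pairs

# Hypothetical document embeddings.
documents = {
    "doc_a": [1.0, 2.0, 3.0],
    "doc_b": [1.0, 2.0, 3.1],   # nearly identical to doc_a
    "doc_c": [-3.0, 0.5, 1.0],  # unrelated
}

duplicates = find_near_duplicates(documents)
print(duplicates)
```

This all-pairs comparison grows quadratically with the number of items, so larger collections would typically run a nearest neighbor query per item instead.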

Even if embeddings are not directly used for an application, many popular machine learning models and methods rely on them internally. For instance, in encoder-decoder architectures, the embeddings generated by the encoder contain the required information for the decoder to produce a result. This architecture is widely employed in applications like machine translation and caption generation.

Products

Vector database

Explain Vector embeddings Like I'm 5 (ELI5)

Imagine you have a box of different toys like cars, dolls, and balls. Now, we want to sort these toys based on how similar they are. We can use something called "vector embedding" to help us with this. Vector embedding is like giving each toy a secret code made of numbers. Toys that are similar will have secret codes that are very close to each other, and toys that are not similar will have secret codes that are very different.

For example, let's say we have a red car, a blue car, and a doll. We can give them secret codes like this:

Red car: [1, 2, 3]

Blue car: [1, 2, 4]

Doll: [5, 6, 7]

See how the red car and the blue car have secret codes that are very close to each other, while the doll has a different secret code? That's because the cars are more similar to each other than they are to the doll.

Vector embedding can also be used for words, pictures, sounds, and many other things. It helps computers understand and sort these things by how similar they are, just like we sorted the toys!