Q* OpenAI

No edit summary |

No edit summary |

||

| (10 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

==Introduction== | What is Q* (pronounced Q-star) from [[OpenAI]]? | ||

#It is the AI breakthrough that will lead to [[AGI]] ([[Artificial general intelligence]])? | |||

#Did it cause the board to OpenAI to fire it's CEO [[Sam Altman]]? | |||

#Is it able to reason logically and solve math problems? | |||

#Is it able to perform [[self-improvement]]? | |||

==Sam Altman's Response== | |||

===Interview Question by Verge=== | |||

The reports about the Q* model breakthrough that you all recently made, what’s going on there? | |||

===Altman Response=== | |||

No particular comment on that unfortunate leak. But what we have been saying — two weeks ago, what we are saying today, what we’ve been saying a year ago, what we were saying earlier on — is that we expect progress in this technology to continue to be rapid, and also that we expect to continue to work very hard to figure out how to make it safe and beneficial. That’s why we got up every day before. That’s why we will get up every day in the future. I think we have been extraordinarily consistent on that. | |||

Without commenting on any specific thing or project or whatever, we believe that progress is research. You can always hit a wall, but we expect that progress will continue to be significant. And we want to engage with the world about that and figure out how to make this as good as we possibly can.<ref name="”1”">Verge Interview https://www.theverge.com/2023/11/29/23982046/sam-altman-interview-openai-ceo-rehired</ref> | |||

==Theory== | |||

===Introduction=== | |||

The recent developments in [[artificial intelligence]], specifically in the realm of [[machine learning]] and [[deep learning]], have brought forth a new concept: the [[Q* hypothesis]]. This idea revolves around the integration of [[tree-of-thoughts reasoning]], [[process reward models]], and the innovative use of [[synthetic data]] to enhance machine learning models. | The recent developments in [[artificial intelligence]], specifically in the realm of [[machine learning]] and [[deep learning]], have brought forth a new concept: the [[Q* hypothesis]]. This idea revolves around the integration of [[tree-of-thoughts reasoning]], [[process reward models]], and the innovative use of [[synthetic data]] to enhance machine learning models. | ||

==Background== | ===Background=== | ||

The Q* hypothesis, pronounced as Q-Star, is a concept that emerged from the [[artificial intelligence]] research community, particularly from [[OpenAI]]. The idea is a hybrid of various methodologies in [[machine learning]] and [[artificial intelligence]], such as [[Q-learning]], [[A* search algorithm]], and others. Q* is seen as a potential breakthrough in the quest for [[Artificial General Intelligence]] (AGI), which aims to create autonomous systems that can outperform humans in most economically valuable tasks. | The Q* hypothesis, pronounced as Q-Star, is a concept that emerged from the [[artificial intelligence]] research community, particularly from [[OpenAI]]. The idea is a hybrid of various methodologies in [[machine learning]] and [[artificial intelligence]], such as [[Q-learning]], [[A* search algorithm]], and others. Q* is seen as a potential breakthrough in the quest for [[Artificial General Intelligence]] (AGI), which aims to create autonomous systems that can outperform humans in most economically valuable tasks. | ||

==Tree-of-Thoughts Reasoning== | ===Tree-of-Thoughts Reasoning=== | ||

===Concept and Implementation=== | ====Concept and Implementation==== | ||

[[Tree-of-thoughts reasoning]] (ToT) is a novel approach in language model prompting. It involves the creation of a tree of reasoning paths, which may converge to a correct answer. This method is a significant step in advancing the capabilities of language models. By breaking down reasoning into chunks and prompting the model to generate new reasoning steps, ToT facilitates a more structured and efficient problem-solving process. | [[Tree-of-thoughts reasoning]] (ToT) is a novel approach in language model prompting. It involves the creation of a tree of reasoning paths, which may converge to a correct answer. This method is a significant step in advancing the capabilities of language models. By breaking down reasoning into chunks and prompting the model to generate new reasoning steps, ToT facilitates a more structured and efficient problem-solving process. | ||

===Comparison with Other Methods=== | ====Comparison with Other Methods==== | ||

ToT stands out from other problem-solving techniques with language models due to its recursive nature. This approach is akin to the concerns of [[AI Safety]] regarding recursively self-improving models. The ToT method scores each vertex or node in the reasoning tree, allowing for a more nuanced evaluation of the reasoning process. This technique aligns with the principles of [[Reinforcement Learning from Human Feedback]] (RLHF), as it allows for scoring of individual steps rather than entire completions. | ToT stands out from other problem-solving techniques with language models due to its recursive nature. This approach is akin to the concerns of [[AI Safety]] regarding recursively self-improving models. The ToT method scores each vertex or node in the reasoning tree, allowing for a more nuanced evaluation of the reasoning process. This technique aligns with the principles of [[Reinforcement Learning from Human Feedback]] (RLHF), as it allows for scoring of individual steps rather than entire completions. | ||

==Process Reward Models (PRM)== | ===Process Reward Models (PRM)=== | ||

[[PRMs]] represent a critical shift in the way [[reinforcement learning]] and human feedback are utilized in language models. Traditional methods score the entire response from a language model, but PRMs assign a score to each step of reasoning. This fine-grained approach facilitates better understanding and optimization of language models. PRMs have been a topic of interest in the AI research community, with their application being essential in advancing the field of language model reasoning, particularly in complex tasks like mathematical problem-solving. | [[PRMs]] represent a critical shift in the way [[reinforcement learning]] and human feedback are utilized in language models. Traditional methods score the entire response from a language model, but PRMs assign a score to each step of reasoning. This fine-grained approach facilitates better understanding and optimization of language models. PRMs have been a topic of interest in the AI research community, with their application being essential in advancing the field of language model reasoning, particularly in complex tasks like mathematical problem-solving. | ||

==Supercharging Synthetic Data== | ===Supercharging Synthetic Data=== | ||

The use of [[synthetic data]] in AI research has been gaining traction. This method involves creating large datasets through process supervision or similar techniques. Synthetic data is pivotal in training AI models, as it provides a vast and diverse range of scenarios for the models to learn from. The use of synthetic data, coupled with the advancements in ToT and PRM, paves the way for more sophisticated and capable AI systems. | The use of [[synthetic data]] in AI research has been gaining traction. This method involves creating large datasets through process supervision or similar techniques. Synthetic data is pivotal in training AI models, as it provides a vast and diverse range of scenarios for the models to learn from. The use of synthetic data, coupled with the advancements in ToT and PRM, paves the way for more sophisticated and capable AI systems. | ||

==Q* Hypothesis in Practice== | ===Q* Hypothesis in Practice=== | ||

The practical application of the Q* hypothesis involves using PRMs to score ToT reasoning data, which is then optimized with [[Offline Reinforcement Learning]] (RL). This methodology differs from traditional [[RLHF]] approaches by focusing on multi-step processes rather than single-step interactions. The intricacies of this method lie in the collection of appropriate prompts, generation of effective reasoning steps, and accurate scoring of a large number of completions. | The practical application of the Q* hypothesis involves using PRMs to score ToT reasoning data, which is then optimized with [[Offline Reinforcement Learning]] (RL). This methodology differs from traditional [[RLHF]] approaches by focusing on multi-step processes rather than single-step interactions. The intricacies of this method lie in the collection of appropriate prompts, generation of effective reasoning steps, and accurate scoring of a large number of completions. | ||

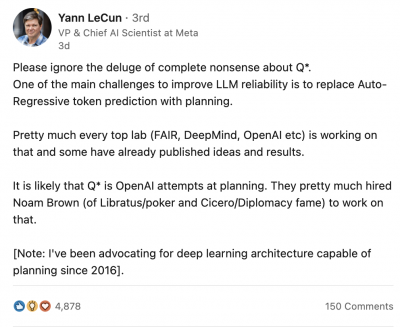

==Yann LeCun Response== | |||

[[File:q*_yann_lecun_response1.png|400px|right]] | |||

<pre> | |||

Please ignore the deluge of complete nonsense about Q*. | |||

One of the main challenges to improve LLM reliability is to replace Auto-Regressive token prediction with planning. | |||

Pretty much every top lab (FAIR, DeepMind, OpenAl etc) is working on that and some have already published ideas and results. | |||

It is likely that Q* is OpenAl attempts at planning. They pretty much hired Noam Brown (of Libratus/poker and Cicero/Diplomacy fame) to work on that. | |||

[Note: I've been advocating for deep learning architecture capable of planning since 2016]. | |||

</pre> | |||

==Comments== | |||

<comments/> | |||

==References== | |||

<references /> | |||

[[Category:Terms]] [[Category:Theories]] [[Category:Important]] | [[Category:Terms]] [[Category:Theories]] [[Category:Important]] | ||

Latest revision as of 07:08, 30 November 2023

What is Q* (pronounced Q-star) from OpenAI?

- Is it the AI breakthrough that will lead to AGI (artificial general intelligence)?

- Did it cause the board of OpenAI to fire its CEO, Sam Altman?

- Is it able to reason logically and solve math problems?

- Is it able to perform self-improvement?

Sam Altman's Response

Interview Question by Verge

The reports about the Q* model breakthrough that you all recently made, what’s going on there?

Altman Response

No particular comment on that unfortunate leak. But what we have been saying — two weeks ago, what we are saying today, what we’ve been saying a year ago, what we were saying earlier on — is that we expect progress in this technology to continue to be rapid, and also that we expect to continue to work very hard to figure out how to make it safe and beneficial. That’s why we got up every day before. That’s why we will get up every day in the future. I think we have been extraordinarily consistent on that.

Without commenting on any specific thing or project or whatever, we believe that progress is research. You can always hit a wall, but we expect that progress will continue to be significant. And we want to engage with the world about that and figure out how to make this as good as we possibly can.[1]

Theory

Introduction

Recent developments in artificial intelligence, specifically in machine learning and deep learning, have brought forth a new concept: the Q* hypothesis. The idea combines tree-of-thoughts reasoning, process reward models, and synthetic data to improve machine learning models.

Background

The Q* hypothesis, pronounced Q-star, is a concept that emerged from the artificial intelligence research community, particularly from OpenAI. The name suggests a hybrid of existing methods in machine learning and artificial intelligence, such as Q-learning and the A* search algorithm, among others. Some see Q* as a potential breakthrough in the quest for artificial general intelligence (AGI), which aims to create autonomous systems that can outperform humans in most economically valuable tasks.
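For readers unfamiliar with the first namesake: in Q-learning, Q* conventionally denotes the optimal action-value function, which satisfies Q*(s, a) = E[r + gamma * max over a' of Q*(s', a')]. The following is a minimal tabular sketch on a hypothetical five-state chain (the environment, the function name `q_learning`, and all parameter values are assumptions made for illustration). It shows only what the symbol means in textbook reinforcement learning and says nothing about what OpenAI's Q* actually is.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning on a toy chain: start at state 0, goal at the right end."""
    rng = random.Random(seed)
    actions = (-1, 1)  # move left or move right
    goal = n_states - 1
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}

    for _ in range(episodes):
        state = 0
        while state != goal:
            # Q-learning is off-policy, so a purely random behavior policy
            # is enough to learn the optimal values on this tiny problem.
            action = rng.choice(actions)
            next_state = min(max(state + action, 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            # The terminal state has no future value.
            future = 0.0 if next_state == goal else max(
                q[(next_state, a)] for a in actions
            )
            # Move the estimate toward the Bellman optimality target.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


if __name__ == "__main__":
    table = q_learning()
    for s in range(4):
        print(s, round(table[(s, -1)], 3), round(table[(s, 1)], 3))
```

With a reward of 1 at the goal and a discount of 0.9, the learned value of moving right from state 0 approaches 0.9 ** 3 = 0.729, and moving right is preferred in every state.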

Tree-of-Thoughts Reasoning

Concept and Implementation

Tree-of-thoughts reasoning (ToT) is an approach to language model prompting. It builds a tree of reasoning paths, some of which may converge on a correct answer. By breaking reasoning into chunks and prompting the model to generate new reasoning steps from each chunk, ToT gives problem-solving more structure than a single linear chain of reasoning.
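The tree-building idea can be sketched as a small beam search. In this toy version, the hypothetical `propose` function stands in for a language model generating candidate next steps, and the hypothetical `score` function stands in for whatever evaluates a partial path. The task (reach a target number using "+3" and "*2") and the names `tree_of_thoughts`, `beam_width`, and `max_depth` are illustrative assumptions, not part of any published ToT implementation.

```python
from typing import Callable, List, Optional, Tuple

Path = Tuple[int, ...]  # a reasoning path: the value reached after each step


def tree_of_thoughts(
    start: int,
    target: int,
    propose: Callable[[int], List[int]],
    score: Callable[[int, int], float],
    beam_width: int = 3,
    max_depth: int = 6,
) -> Optional[Path]:
    """Expand a tree of partial paths level by level, keeping the best few."""
    frontier: List[Path] = [(start,)]
    for _ in range(max_depth):
        candidates: List[Path] = []
        for path in frontier:
            for next_value in propose(path[-1]):
                new_path = path + (next_value,)
                if next_value == target:
                    return new_path
                candidates.append(new_path)
        # Prune: keep only the highest-scoring partial paths.
        candidates.sort(key=lambda p: score(p[-1], target), reverse=True)
        frontier = candidates[:beam_width]
    return None


def propose(value: int) -> List[int]:
    """Toy stand-in for a model proposing next steps: add 3 or double."""
    return [value + 3, value * 2]


def score(value: int, target: int) -> float:
    """Toy stand-in for a step evaluator: closer to the target is better."""
    return -abs(target - value)


if __name__ == "__main__":
    print(tree_of_thoughts(1, 14, propose, score))
```

The pruning step is where a learned evaluator would plug in: replacing `score` changes which branches of the tree survive.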

Comparison with Other Methods

ToT differs from other problem-solving techniques for language models in its recursive nature, which echoes AI safety concerns about recursively self-improving models. The method scores each vertex (node) in the reasoning tree, allowing a more fine-grained evaluation of the reasoning process. This connects ToT to Reinforcement Learning from Human Feedback (RLHF): feedback can be attached to individual reasoning steps rather than only to entire completions.

Process Reward Models (PRM)

PRMs change how reinforcement learning and human feedback are applied to language models. Traditional reward models score the entire response from a language model, whereas a PRM assigns a score to each step of reasoning. This finer granularity makes it easier to see where a chain of reasoning goes wrong and to optimize the model accordingly. PRMs have attracted interest in the AI research community, particularly for complex tasks such as mathematical problem-solving.
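The difference between scoring a whole response and scoring each step can be illustrated with a toy example. Here a hand-written arithmetic checker, the hypothetical `toy_step_score`, stands in for a learned PRM, and `outcome_score` stands in for a traditional whole-response reward. The step format ("a op b = c") and all function names are assumptions made for this sketch.

```python
import operator
from typing import List

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def toy_step_score(step: str) -> float:
    """Stand-in for a learned PRM: 1.0 if 'a op b = c' is correct, else 0.0."""
    left, right = step.split("=")
    a, op, b = left.split()
    return 1.0 if OPS[op](int(a), int(b)) == int(right) else 0.0


def process_scores(steps: List[str]) -> List[float]:
    """Process-style reward: one score per reasoning step."""
    return [toy_step_score(step) for step in steps]


def outcome_score(steps: List[str], expected_answer: int) -> float:
    """Outcome-style reward: looks only at the final answer."""
    final_answer = int(steps[-1].split("=")[1])
    return 1.0 if final_answer == expected_answer else 0.0


if __name__ == "__main__":
    # A chain that reaches the right answer (10) through a wrong first step.
    chain = ["2 + 3 = 6", "6 * 2 = 12", "12 - 2 = 10"]
    print(outcome_score(chain, 10))    # whole-response view
    print(process_scores(chain))       # per-step view
    print(min(process_scores(chain)))  # one common way to aggregate step scores
```

The outcome-style score accepts this chain because the final answer happens to be right, while the per-step scores flag the faulty first step. Taking the minimum is just one possible aggregation, chosen here for simplicity.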

Supercharging Synthetic Data

The use of synthetic data in AI research has been gaining traction. In this context it means creating large datasets through process supervision or similar techniques. Synthetic data can supply a wide and diverse range of scenarios for models to learn from. Combined with ToT and PRMs, it is expected to enable more sophisticated and capable AI systems.

Q* Hypothesis in Practice

In practice, the Q* hypothesis amounts to using PRMs to score ToT reasoning data, which is then optimized with offline reinforcement learning (RL). This differs from traditional RLHF approaches by focusing on multi-step processes rather than single-step interactions. The difficulty lies in collecting appropriate prompts, generating effective reasoning steps, and accurately scoring a large number of completions.
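One way to picture the data side of this pipeline is to turn a scored reasoning path into per-step transitions that an offline RL algorithm could train on. The sketch below assumes the step scores have already been produced by some PRM. The `Transition` record, the `build_offline_dataset` function, and the use of a discounted return-to-go are all illustrative assumptions; the source does not specify which offline RL method or data format would be used.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Transition:
    state: str           # the prompt plus the reasoning steps taken so far
    action: str          # the next reasoning step
    reward: float        # the (assumed) PRM score for that step
    return_to_go: float  # discounted sum of this reward and all later ones


def build_offline_dataset(
    prompt: str,
    steps: List[str],
    step_rewards: List[float],
    gamma: float = 1.0,
) -> List[Transition]:
    """Convert one scored reasoning path into multi-step training records."""
    # Accumulate discounted returns from the last step backwards.
    returns: List[float] = []
    running = 0.0
    for reward in reversed(step_rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()

    transitions: List[Transition] = []
    context = prompt
    for step, reward, ret in zip(steps, step_rewards, returns):
        transitions.append(Transition(context, step, reward, ret))
        context = context + "\n" + step  # the next state includes this step
    return transitions


if __name__ == "__main__":
    data = build_offline_dataset("Q", ["a", "b", "c"], [1.0, 0.0, 1.0], gamma=0.5)
    for t in data:
        print(repr(t.state), t.action, t.reward, t.return_to_go)
```

Each record treats one reasoning step as an action taken in the context of everything before it, which is what makes the setup multi-step rather than a single score for the whole completion.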

Yann LeCun Response

Please ignore the deluge of complete nonsense about Q*.

One of the main challenges to improve LLM reliability is to replace Auto-Regressive token prediction with planning.

Pretty much every top lab (FAIR, DeepMind, OpenAI etc) is working on that and some have already published ideas and results.

It is likely that Q* is OpenAI attempts at planning. They pretty much hired Noam Brown (of Libratus/poker and Cicero/Diplomacy fame) to work on that.

[Note: I've been advocating for deep learning architecture capable of planning since 2016].

References

[1] Verge Interview, https://www.theverge.com/2023/11/29/23982046/sam-altman-interview-openai-ceo-rehired