DALL-E

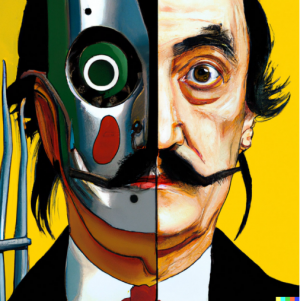

DALL-E is an artificial intelligence (AI) system developed by OpenAI that can create images from a text description (figure 1). [1] Its name blends those of the Spanish surrealist artist Salvador Dalí and WALL-E, the Pixar robot character. [2] [3]

Its deep learning image synthesis model has been trained on millions of images from the internet. Using diffusion, a generative technique guided by the associations between words and images that the model learned during training, it can generate images in different artistic styles from a user's natural-language prompt (figure 1). According to OpenAI, diffusion "starts with a pattern of random dots and gradually alters that pattern towards an image when it recognizes specific aspects of that image." [1] [3] [4] The system's datasets are continually updated so that it interprets prompts correctly and produces images that correspond closely to the user's intent. [3]
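The quoted description of diffusion can be made more concrete with the forward (noising) half of the process, which a diffusion model is trained to reverse. The sketch below assumes a DDPM-style formulation with a linear noise schedule and a toy four-pixel "image"; the schedule values and the names `linear_betas`, `alpha_bars` and `noisy_sample` are illustrative assumptions, not details of OpenAI's implementation.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Assumed linear noise schedule: how much noise is added at each step."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative product of (1 - beta): the fraction of the original signal kept after t steps."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def noisy_sample(x0, t, abar, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * Gaussian noise."""
    a = abar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(0)
image = [0.8, -0.3, 0.5, 0.1]                # toy "image": four pixel values in [-1, 1]
abar = alpha_bars(linear_betas(1000))
early = noisy_sample(image, 10, abar, rng)   # still close to the original pixels
late = noisy_sample(image, 999, abar, rng)   # essentially a pattern of random dots
```

Generation runs this in reverse: starting from pure noise, a trained network repeatedly predicts and removes noise, with the text prompt guiding each step. That learned network is exactly the part this sketch leaves out.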

According to Singh et al. (2021), DALL-E has shown an "impressive ability to systematically generalize for zero-shot image generation. Trained with a dataset of text-image pairs, it can generate plausible images even from an unfamiliar text prompt such as “avocado chair” or “lettuce hedgehog”, a form of systematic generalization in the text-to-image domain." Zero-shot imagination, the ability to compose a new scene that differs from the training distribution, is at the core of human intelligence. However, the authors note that this kind of compositionality is to be expected from the system, since the text prompt already provides a composable structure. [5]

DALL-E is a competitor to Midjourney.

Capabilities

DALL-E can create original artwork in different styles, including photorealism, paintings, and emoji. [2] It can also generate anthropomorphized versions of animals and objects and combine different concepts, styles, and attributes in plausible ways. The system can also edit existing images realistically, adding or removing elements while keeping shadows, reflections, and textures consistent without explicit instructions. [1] [2] [6] According to Huang (2022), the AI "showed the ability to 'fill in the blanks' to infer appropriate details without specific prompts such as adding Christmas imagery to prompts commonly associated with the celebration, and appropriately-placed shadows to images that did not mention them." [2] Furthermore, DALL-E can expand images beyond their original canvas and create variations of an original image. [2]

Huang (2022) also noted that DALL-E can produce images for a wide range of arbitrary descriptions, from different viewpoints, with a low failure rate, and that its visual reasoning ability allows it to solve Raven's Matrices, visual tests used to measure intelligence. [2]

However, the program has limitations. For example, it has difficulty distinguishing "A yellow book and a red vase" from "A red book and a yellow vase." Prompts that ask for more than three objects, or that use negation, numbers, or connected sentences, can also lead to mistakes, such as features being attributed to the wrong objects. [2]

Full usage rights

On July 20, 2022, OpenAI announced that users would get full usage rights to commercialize the images they create, including the right to reprint, sell, and merchandise them. However, OpenAI does not relinquish its own right to commercialize images created by users. [7]

DALL-E and CLIP

DALL-E was developed and announced at the same time as CLIP (Contrastive Language-Image Pre-training). The two models are different but related. CLIP, a zero-shot system, was trained on 400 million pairs of images "with text captions scraped from the internet". [2] [3]

CLIP's role relative to the image generator is to understand and rank DALL-E's output by predicting which caption, from a list of thousands of captions selected from a dataset, best fits an image. In other words, CLIP matches images to text descriptions, while DALL-E does the opposite, composing images from text; in DALL-E 2 this approach is called unCLIP. [2] [3]
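The ranking step can be illustrated by comparing an image embedding with several caption embeddings and sorting by cosine similarity, the measure CLIP's contrastive training is built around. In this minimal sketch the three-dimensional vectors are made up for illustration; real CLIP embeddings come from trained text and image encoders and are much larger. The function names `cosine` and `rank_captions` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def rank_captions(image_embedding, caption_embeddings):
    """Return captions ordered from best to worst match for the image."""
    scored = [(cosine(image_embedding, emb), caption)
              for caption, emb in caption_embeddings.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [caption for _, caption in scored]


# Toy embeddings (hypothetical values, not produced by a real CLIP model)
image_embedding = [0.9, 0.1, 0.2]
captions = {
    "an armchair shaped like an avocado": [0.8, 0.2, 0.1],
    "a hedgehog made of lettuce": [0.1, 0.9, 0.3],
    "a red vase on a table": [0.2, 0.1, 0.9],
}
ranking = rank_captions(image_embedding, captions)
```

The same similarity score can be used the other way round, ranking several candidate images generated for a single prompt and keeping the best-scoring ones.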

Safety features

OpenAI has continually worked on the safety and security of DALL-E to prevent abuse during image creation. The company has said that it has enhanced its "safety system, improving the text filters and tuning the automated detection and response system for content policy violations." To prevent users from creating violent or harmful content, such content has been removed from the machine learning datasets, and text prompts are filtered in the same way. The system also guards against deepfakes by not allowing the creation of photorealistic images of real people's faces, including those of public figures. [3]

DALL-E 2 API

The second version of the software, DALL-E 2, is available as an API, so developers can build the system into their own apps, websites, and services. Microsoft has added it to Bing and Microsoft Edge through its Image Creator tool. CALA, a fashion design app, uses the DALL-E 2 API to let its users refine design ideas from text prompts, and Mixtiles, a photo startup, already uses the API in an artwork-creation flow for its users. [8]
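As an illustration of what building the API into an app involves, the sketch below prepares (but does not send) an HTTP request to OpenAI's image generation endpoint using only the Python standard library. It assumes the endpoint `https://api.openai.com/v1/images/generations` and the `prompt`, `n` and `size` fields as documented for the Images API; the API may have changed since, so check the current reference before relying on it. `YOUR_API_KEY` is a placeholder and `build_generation_request` is a hypothetical helper.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/images/generations"
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}  # the three resolutions priced for DALL-E 2


def build_generation_request(api_key, prompt, n=1, size="1024x1024"):
    """Build (but do not send) a POST request asking for n images of the given size."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size}")
    body = json.dumps({"prompt": prompt, "n": n, "size": size}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_generation_request("YOUR_API_KEY", "a corgi wearing a beret, oil painting",
                               n=2, size="512x512")
# Sending it requires a valid key and network access, for example:
# with urllib.request.urlopen(req) as resp:
#     urls = [item["url"] for item in json.load(resp)["data"]]
```

Validating the size locally keeps obviously invalid requests from being sent; in practice, most developers would use OpenAI's official client library instead of raw HTTP.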

Future applications

AI-generated images have several applications in content creation. For example, DALL-E could be used to generate images of products that do not exist yet, or images that would be too costly or difficult to photograph. [3]

In the future, users could combine multiple AI tools to create fully animated art. Gil Perry, CEO of D-ID, has said that "we’re seeing that people are layering different AI tools to produce even more creative content. An image of a person created on DALL-E can be animated and given voice using D-ID (AI-generated text-to-video). A landscape created in Dreamstudio can turn into an opening shot of a movie, accompanied by music composed on Jukebox." [3]

How it works

Four key concepts underlie how the current version of DALL-E works:

- CLIP: a model trained on image-caption pairs that represents both text and images as vectors (embeddings) in a shared space;

- Prior model: generates a CLIP image embedding from a CLIP text embedding;

- Decoder diffusion model (unCLIP): generates an image from the CLIP image embedding;

- DALL-E 2: prior plus diffusion decoder (unCLIP) models (figure 2). [3] [9]
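The components listed above form a chain: prompt, then CLIP text embedding, then (via the prior) CLIP image embedding, then (via the decoder) image. The sketch below shows only that data flow. Every stage is a hypothetical stand-in (`toy_encode_text`, `toy_prior`, `toy_decode`); in the real system each one is a large trained neural network, and the decoder runs many diffusion steps rather than filling a grid.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]
Image = List[List[float]]


@dataclass
class UnCLIPPipeline:
    """Hypothetical wiring of the DALL-E 2 stages; each stage is a pluggable callable."""
    encode_text: Callable[[str], Vector]   # stands in for the CLIP text encoder
    prior: Callable[[Vector], Vector]      # CLIP text embedding -> CLIP image embedding
    decode: Callable[[Vector], Image]      # CLIP image embedding -> image (diffusion decoder)

    def generate(self, prompt: str) -> Image:
        text_embedding = self.encode_text(prompt)
        image_embedding = self.prior(text_embedding)
        return self.decode(image_embedding)


def toy_encode_text(prompt: str) -> Vector:
    # Placeholder features, NOT a real CLIP encoder
    return [len(prompt) / 100.0, prompt.count(" ") / 10.0]


def toy_prior(text_embedding: Vector) -> Vector:
    # Placeholder identity map; the real prior is itself a learned generative model
    return list(text_embedding)


def toy_decode(image_embedding: Vector, size: int = 4) -> Image:
    # Placeholder that fills a size x size grid; the real decoder iteratively denoises
    value = sum(image_embedding)
    return [[value] * size for _ in range(size)]


pipeline = UnCLIPPipeline(toy_encode_text, toy_prior, toy_decode)
image = pipeline.generate("an astronaut riding a horse")
```

Keeping the stages as separate callables mirrors the design described above: the prior and the decoder are distinct models that meet only at the CLIP image embedding.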

Development

OpenAI worked on text generation before DALL-E. In 2019 it created GPT-2, a model with 1.5 billion parameters trained on 8 million web pages to predict the next word in a text. Its successor, GPT-3, became the basis for DALL-E, adapted to generate images instead of text. [2] [3]

DALL-E was introduced in January 2021. A year later, in April 2022, OpenAI introduced DALL-E 2, an improved version of the software. [1] [2] [4] The new version entered public beta in July of the same year [10], and a pricing model was introduced at the same time. [4]

In September 2022, OpenAI announced that it had removed the DALL-E waitlist, so anyone could now sign up for and use the service. [4]

Finally, in November 2022, the system was made available as an API. At that time, more than 3 million people were using DALL-E, creating more than 4 million images per day. [8]

DALL-E 2

According to OpenAI, DALL-E 2 started as a research project and became the new version of the system for generating original synthetic images from input text. [1] [11] The new version brings several improvements (figure 3), including higher resolution, better comprehension of prompts, and new capabilities such as inpainting and outpainting. [1] [3] The company also continues to invest in safety by improving the prevention of harmful generations and curbing misuse. [1]

DALL-E 2 uses 3.5 billion parameters, plus an additional 1.5 billion parameters to enhance resolution, whereas the first version used 12 billion. It generates images at four times the resolution, and its creations are overall more realistic and accurate. [3] [9]

- Figure 3: Comparison of images generated on DALL-E 1 (left) and 2 (right). Source: OpenAI.

Inpainting and Outpainting

The second version of DALL-E introduced inpainting and outpainting. With inpainting, generated or uploaded images can be edited by the program, which adapts new objects to the style of that part of the image (figure 4); textures and reflections are updated to match the edits to the original image. [10] [9] It can also edit images of human faces. [12] Outpainting lets users expand an image beyond its original borders, taking existing shadows, reflections, and textures into account as it fills the extended area with new content. The software can also create larger images in different aspect ratios. [3] [4] [10]
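Both features can be framed as filling a masked region: inpainting regenerates a region inside the image, while outpainting first enlarges the canvas and then treats the new border as the region to fill. The sketch below covers only that bookkeeping on small integer grids. The model that actually produces matching content is replaced by a constant stand-in array, and `composite` and `outpaint_canvas` are hypothetical helpers, not OpenAI functions.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask is 0; take generated pixels where mask is 1."""
    return [[g if m else o for o, g, m in zip(orow, grow, mrow)]
            for orow, grow, mrow in zip(original, generated, mask)]


def outpaint_canvas(image, pad, fill=0):
    """Enlarge the canvas by `pad` pixels on every side.

    Returns the enlarged canvas and a mask that is 1 on the new border (to be generated)
    and 0 where the original pixels sit (to be preserved).
    """
    h, w = len(image), len(image[0])
    height, width = h + 2 * pad, w + 2 * pad
    canvas = [[fill] * width for _ in range(height)]
    mask = [[1] * width for _ in range(height)]
    for r in range(h):
        for c in range(w):
            canvas[r + pad][c + pad] = image[r][c]
            mask[r + pad][c + pad] = 0
    return canvas, mask


photo = [[10, 20], [30, 40]]
canvas, mask = outpaint_canvas(photo, pad=1)
generated = [[7] * 4 for _ in range(4)]   # stand-in for the model's output on the 4x4 canvas
result = composite(canvas, generated, mask)
```

In a real system the generator sees the preserved pixels while producing the masked area, which is what lets new content continue the existing shadows, reflections, and textures; the final composite simply guarantees that unmasked pixels stay untouched.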

- Figure 4: Example of inpainting with a corgi added to the image. Source: OpenAI

Characteristics

Marcus et al. (2022) listed several observations about DALL-E 2 based on tests of the program:

- The images have great visual quality;

- The system is impressive regarding image generation;

- Aspects of the system's language abilities seem to be reliable;

- Compositionality seems to be a problem: results can be incomplete; relationships between entities are challenging; and anaphora may pose problems;

- Numbers can be poorly understood;

- Negation is problematic;

- Failure in common sense reasoning;

- Need for improvement in content filters;

- Generalization is difficult to assess.

Commercial service

DALL-E offers new users 50 credits; more can be bought in groups of 115 for $15. [4] For the DALL-E 2 API, pricing varies by resolution: 1024×1024 images cost $0.02 per image, 512×512 images $0.018, and 256×256 images $0.016. [8]
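The pricing above lends itself to a small cost calculator. This sketch hard-codes the November 2022 prices quoted in this section, which may no longer be current, and uses `Decimal` so that currency arithmetic is exact; the names `api_cost` and `price_per_credit` are hypothetical.

```python
from decimal import Decimal

# Per-image DALL-E 2 API prices quoted above (November 2022)
API_PRICE_PER_IMAGE = {
    "1024x1024": Decimal("0.020"),
    "512x512": Decimal("0.018"),
    "256x256": Decimal("0.016"),
}
CREDIT_PACK_SIZE = 115        # credits per purchased group
CREDIT_PACK_PRICE = Decimal("15")


def api_cost(num_images, size):
    """Total API cost in US dollars for num_images images at the given resolution."""
    return API_PRICE_PER_IMAGE[size] * num_images


def price_per_credit():
    """Dollar cost of one purchased credit, rounded to a hundredth of a cent."""
    return (CREDIT_PACK_PRICE / CREDIT_PACK_SIZE).quantize(Decimal("0.0001"))
```

For example, `api_cost(100, "1024x1024")` gives $2.00, and each purchased credit works out to about $0.13.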

Concerns

The main concerns about AI image-generation software fall into three groups: artistic integrity, copyright, and violent images and deepfakes.

With new AI systems capable of generating artwork from text prompts, some have raised concerns about artistic integrity: if anyone can type some text and generate a beautiful artwork, or expand existing works with outpainting, there are implications for the future of art. However, the same technology also enables a new generation of artists who had been held back by a lack of time, a lack of formal art education, or physical disabilities. [10] [13]

OpenAI gave users commercial rights over their images, avoiding some tricky intellectual property questions. Since users contribute only the text prompts and the images are machine-made, the results are not likely to be copyrightable. [13] In August 2022, Getty Images banned the upload and sale of images generated by DALL-E 2. Other sites, such as Newgrounds, PurplePort, and FurAffinity, did the same; according to Getty Images CEO Craig Peters, the main issue was "unaddressed right issues", since the training datasets contain copyrighted images. In contrast, Shutterstock announced that it would begin using DALL-E 2 for content. [8]

Regarding deepfakes, other image-generation systems such as Stable Diffusion are already being used to generate deepfakes of celebrities. [2] [12] OpenAI has a safety system in place to block that type of content. It also bans "'political' content, along with content that is 'shocking,' 'sexual,' or 'hateful,' to name just a few of the company’s capacious categories of forbidden images." [13]

References

- [1] OpenAI. DALL-E 2. OpenAI. https://openai.com/dall-e-2/

- [2] Huang, H (2022). Second version of Pixar character WALL-E & Salvador Dalí: Introduction. https://www.researchgate.net/publication/362427293_Second_Version_of_Pixar_character_WALL-E_Salvador_Dali_Introduction

- [3] Grimes, B (2022). What is DALL-E? How it works and how the system generates AI art. Interesting Engineering. https://interestingengineering.com/innovation/what-is-dall-e-how-it-works-and-how-the-system-generates-ai-art

- [4] Edwards, B (2022). DALL-E image generator is now open to everyone. Ars Technica. https://arstechnica.com/information-technology/2022/09/openai-image-generator-dall-e-now-available-without-waitlist/

- [5] Singh, G, Deng, F and Ahn, S (2021). Illiterate DALL-E learns to compose. arXiv preprint arXiv:2110.11405.

- [6] OpenAI. DALL·E: Creating Images from Text. OpenAI. https://openai.com/blog/dall-e/

- [7] OpenAI. DALL-E now available in beta. OpenAI. https://openai.com/blog/dall-e-now-available-in-beta/

- [8] Wiggers, K (2022). Now anyone can build apps that use DALL-E 2 to generate images. TechCrunch. https://techcrunch.com/2022/11/03/now-anyone-can-build-apps-that-use-dall-e-2-to-generate-images/

- [9] Romero, A (2022). DALL·E 2, explained: the promise and limitations of a revolutionary AI. Towards Data Science. https://towardsdatascience.com/dall-e-2-explained-the-promise-and-limitations-of-a-revolutionary-ai-3faf691be220

- [10] Gray, J (2022). OpenAI adds 'Outpainting' feature to its AI system, DALL-E, allowing users to make AI images of any size. Digital Photography Review. https://www.dpreview.com/news/8850471712/openai-adds-outpainting-feature-dall-e-allowing-users-to-make-ai-images-of-any-size

- [11] Marcus, G, Davis, E and Aaronson, S (2022). A very preliminary analysis of DALL-E 2. arXiv preprint arXiv:2204.13807.

- [12] Vincent, J (2022). OpenAI’s image generator DALL-E can now edit human faces. The Verge. https://www.theverge.com/2022/9/20/23362631/openai-dall-e-ai-art-generator-edit-realistic-faces-safety

- [13] Rizzo, J (2022). Who will own the art of the future? Wired. https://www.wired.com/story/openai-dalle-copyright-intellectual-property-art/