AI Anxiety

The term “artificial intelligence (AI) anxiety” describes fear and apprehension about the development of AI and its increasing role in daily life. As the technology advances, people are becoming anxious about its future consequences, especially for jobs. [1] [2] The roots of AI anxiety go back to the development of modern computers, when people also felt a sense of fear, seeing the new technology as a threat to the idea of what it means to be human. [2] However, according to Li & Huang (2020), AI anxiety differs from computer anxiety. For example, a computer can perform human work, but it operates mechanically, whereas AI can make autonomous decisions and operate independently of humans. AI also raises ethical issues concerning the relationship between humans and machines, which computer anxiety is not associated with. [3]

As people grapple with a high rate of change in the present and uncertainty about the future, this type of anxiety is becoming a recognized phenomenon. [4] With the accessibility of generative AI tools like ChatGPT and the proliferation of headlines about robots taking over jobs, some workers have reported anxiety about their future and questioned whether their skills will remain relevant in the future labour market. [5] Psychologically, this anxiety may be rooted in the fact that, for many people, a job is more than a means of making a living: it is an essential part of their identity and provides a sense of purpose. [1]

The fear of the unknown has always been associated with technology. In the case of AI, the anxiety seems to come not only from the fear of mass unemployment but also from concerns about machine intelligence, superintelligence, and whether the wrong people will gain access to its power. [6] For example, a survey conducted by Harris Poll and MITRE in February 2023 found that 78 percent of Americans were concerned that AI could be used for malicious purposes. [7]

According to Lemay et al. (2020), “Wang and Wang [8] situate AI anxiety with respect to technophobia which they define as an irrational fear of technology characterized by negative attitudes toward technology, anxiety about the future impacts of advancing technology, and self-admonishing beliefs about their ability. They divide AI anxiety in two aspects, computer anxiety and robot anxiety. They term AI anxiety as a distinct and independent variable. They define AI anxiety as ‘an overall, affective response of anxiety or fear that inhibits an individual from interacting with AI.’” [4]

Factors contributing to AI anxiety

Several studies have examined the factors that contribute to AI anxiety. Lemay et al. (2020) studied the relationship between people's beliefs about AI and their levels of anxiety, taking into account their technology-related predispositions. They concluded that “AI anxiety runs through a spectrum and is influenced by real, practical consequences of immediate effects of increased automation but also influenced by popular representations and discussions of the negative consequences of artificial general intelligence and killer robots.” [4] This section focuses on several contributing factors identified in the research of Johnson & Verdicchio (2017) and Li & Huang (2020).

Johnson & Verdicchio (2017)

Sociotechnical blindness

Johnson & Verdicchio (2017) note that expressions of AI anxiety generally abstract AI technology from the context of its use and existence. They ignore the human beings, social institutions, and arrangements that give AI its functional capacity and meaning. Yet the behavior of AI programs only has significance in relation to the technological systems that serve human purposes. [2]

They distinguish AI programs from AI sociotechnical systems: programs are just lines of code, whereas AI systems consist of code together with the context in which the code is used. AI anxiety generally results from a focus on AI programs, “leaving out of the picture the human beings and human behavior that create, deploy, maintain, and assign meaning to AI program operations.” This sociotechnical blindness leads to a failure to understand that AI is a system that operates in conjunction with people and social institutions. Therefore, according to the researchers, sociotechnical blindness enables the development of unrealistic scenarios, because the human part of the overall system is left out. [2]

Confusion about autonomy

Thinking of AI as autonomous without considering what counts as autonomy is another factor contributing to AI anxiety. The concept of autonomy means something different when applied to humans than when applied to computational entities. In humans, it is associated with the capacity to make decisions, to choose, and to act, and it is connected to ideas of human freedom. Traditionally, these capacities have been used to distinguish humans from other living and nonliving entities, and they serve as a basis for morality. As Johnson and Verdicchio (2017) put it, “Only beings with autonomy can be expected to conform their behavior to rules and laws.” [2]

The authors note that when non-experts hear about machine autonomy, they attribute to it the same characteristics as human autonomy, including the freedom to choose one's behavior. This shapes concerns about “autonomous” AI. However, computational autonomy, in the sense that the programmer cannot know in advance the precise outcomes of a program, is defined in terms of software and hardware processes. It does not correspond directly to human autonomy unless computer scientists accept computationalism, the view that the human mind is itself a computational system. [2]

Technological development

Finally, the authors identify an inaccurate conception of technological development as another contributor to AI anxiety. In their words, “Futuristic AI scenarios jump to the endpoint of a path of technological development without thinking carefully about the steps necessary in order to get to that endpoint.”

The future developmental path of AI is unclear, and each step along it requires human decisions. As AI advances, the role of human decision-making may shrink, but it will remain part of the overall process in one way or another. Neglecting the role of humans in technological development leads futurists to center their narratives on superintelligent AI that evolves into a dangerous entity separate from humanity. [2]

Li & Huang (2020)

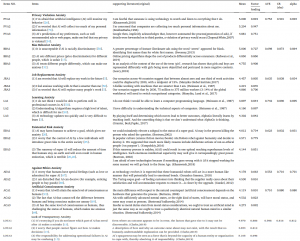

Li & Huang (2020) [3] identified eight dimensions of anxiety that may contribute to overall AI anxiety (Figure 1):

- Privacy violation anxiety: arises when users experience violations of their privacy by AI. For example, targeted advertising and facial recognition can be carried out by unsupervised AI.

- Bias behavior anxiety: caused by experiencing direct discrimination by AI. This can result from AI adopting different strategies for different groups based on data analysis, or from biased training datasets.

- Job replacement anxiety: caused by observing other people's experiences of being replaced by AI, or by worrying about being replaced by AI, in a wide range of occupations.

- Learning anxiety: results from observing other people's experiences of learning about AI. It can relate to a person's lack of self-confidence in learning about artificial intelligence.

- Existential risk anxiety: anxiety about the risk that AI will reduce humanity's potential for survival.

- Ethics violation anxiety: caused by the possibility that AI will exhibit behaviors contrary to the rules of human ethics when interacting with humans.

- Artificial consciousness anxiety: anxiety about the possibility that AI will destroy the uniqueness of human intelligence.

- Lack of transparency anxiety: anxiety caused by poorly understood AI decision-making mechanisms. [3]

Signs of AI anxiety

Because the signs of AI anxiety can combine symptoms of occupational stress and general anxiety, it can be difficult to identify. However, some common symptoms can indicate this particular type of technology-related anxiety. [1]

- Frequently checking the news about AI and its developments.

- Feeling anxiety or stress about the effect of AI on jobs and industries.

- Feeling overwhelmed by the fast pace of technological evolution.

- Obsessing over worst-case scenarios involving AI.

- Avoiding the use of technology, new devices, or software. [1]

Dealing with AI anxiety

There are several strategies for dealing with AI anxiety. One useful general approach is to recognize that whenever technological development has brought about a major change or shift, humans have adapted to it. [6] Other suggested measures include:

- Learning about AI: understanding its capabilities and limitations. [1] It also helps to recognize how much AI is already part of day-to-day life, in applications such as Siri or Alexa. [7]

- Emphasizing identity: people can derive a healthy sense of identity from their work. When AI tools take over parts of that work, this can lead to a loss of identity and a decrease in perceived value. Reminding people of their importance and their contributions to the organization can counterbalance the negative feelings brought on by AI. [9]

- Developing skills: since AI tools can take over some work, developing new skills and capabilities is essential, as new opportunities will appear. A person can also focus on developing skills that are uniquely human, such as critical thinking and empathy. [9]

- Embracing change: recognizing that change is inevitable can reduce the fear surrounding this area of technological development. [1]

- Building community: as technology becomes more involved in work, people report fewer opportunities to bond with colleagues. Building a strong sense of community can improve social connections and provide a support network for people experiencing anxiety. [1] [9]

References

- [1] Mind Help (2023). AI Anxiety: Why People Fear Losing Their Jobs To AI and ChatGPT? National Anxiety Month. Mind Help. https://mind.help/news/can-ai-anxiety-have-consequences-on-our-mental-health/

- [2] Johnson, DG and Verdicchio, M (2017). AI Anxiety. Journal of the Association for Information Science and Technology, 68(9): 2267-2270. DOI: 10.1002/asi.23867

- [3] Li, J and Huang, J-S (2020). Dimensions of Artificial Intelligence Anxiety Based on the Integrated Fear Acquisition Theory. Technology in Society, 63. DOI: 10.1016/j.techsoc.2020.101410

- [4] Lemay, DJ, Basnet, RB and Doleck, T (2020). Fearing the Robot Apocalypse: Correlates of AI Anxiety. International Journal of Learning Analytics and Artificial Intelligence for Education, 2(2): 24-33. DOI: 10.3991/ijai.v2i2.16759

- [5] Cox, J (2023). AI Anxiety: The Workers Who Fear Losing Their Jobs to Artificial Intelligence. BBC. https://www.bbc.com/worklife/article/20230418-ai-anxiety-artificial-intelligence-replace-jobs

- [6] Schmelzer, R (2019). Should We Be Afraid of AI? Forbes. https://www.forbes.com/sites/cognitiveworld/2019/10/31/should-we-be-afraid-of-ai/

- [7] Wijayaratne, S (2023). Are the Headlines About How AI Is Changing the World Stressing You Out? Everyday Health. https://www.everydayhealth.com/columns/my-health-story/are-the-headlines-about-ai-changing-the-world-stressing-you-out/

- [8] Wang, Y-Y and Wang, Y-S (2019). Development and Validation of an Artificial Intelligence Anxiety Scale: An Initial Application in Predicting Motivated Learning Behavior. Interactive Learning Environments, 30(4): 619-634. DOI: 10.1080/10494820.2019.1674887

- [9] Brower, T (2023). People Fear Being Replaced By AI And ChatGPT: 3 Ways To Lead Well Amidst Anxiety. Forbes. https://www.forbes.com/sites/tracybrower/2023/03/05/people-fear-being-replaced-by-ai-and-chatgpt-3-ways-to-lead-well-amidst-anxiety