Feature vector

- See also: Machine learning terms

Introduction

Machine learning models operate on feature vectors: ordered lists of numerical values that describe an object or phenomenon. A feature vector can be defined as an n-dimensional vector of numerical features representing a single data point, or example.

A feature vector is used both when training a model and when using the trained model to make predictions (inference).

Creating Feature Vectors

Feature vectors are created by extracting relevant features from raw data. Feature extraction involves selecting the most significant characteristics and representing them numerically. For instance, in image recognition, relevant features might include color, texture, and shape of an object in an image; similarly, in natural language processing these might include word or phrase frequencies within a document.
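As a minimal sketch of the natural language processing case, the snippet below turns a document into a vector of word counts. The vocabulary, the document, and the function name `word_count_vector` are assumptions made for illustration; real pipelines typically use a proper tokenizer and a much larger vocabulary.

```python
from collections import Counter


def word_count_vector(document, vocabulary):
    """Return the count of each vocabulary word in the document, in vocabulary order."""
    # Lowercase and split on whitespace; a real tokenizer would also handle punctuation.
    counts = Counter(document.lower().split())
    # Counter returns 0 for words that never appear in the document.
    return [counts[word] for word in vocabulary]


# Hypothetical vocabulary and document, chosen only for illustration.
vocabulary = ["cat", "dog", "sat", "mat"]
document = "The cat sat on the mat while the other cat slept"

features = word_count_vector(document, vocabulary)
print(features)  # [2, 0, 1, 1]
```

Each position in the resulting vector always refers to the same word, so vectors built from different documents can be compared position by position.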

Once features have been identified, they are assembled into a vector of numerical values, the feature vector, which serves as input to a machine learning algorithm. Constructing a good feature vector often involves additional steps such as feature scaling (putting features on comparable ranges) or dimensionality reduction (reducing the number of features), which can require some expertise to apply well.
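Feature scaling can be sketched as follows, assuming standardization (zero mean, unit standard deviation per feature) as the scaling method; other schemes such as min-max scaling are equally common. The data and the function name `standardize_columns` are hypothetical.

```python
from statistics import mean, pstdev


def standardize_columns(vectors):
    """Rescale each feature (column) to zero mean and unit standard deviation."""
    columns = list(zip(*vectors))
    means = [mean(col) for col in columns]
    # Fall back to 1.0 for constant features to avoid dividing by zero.
    stds = [pstdev(col) or 1.0 for col in columns]
    return [
        [(value - m) / s for value, m, s in zip(vector, means, stds)]
        for vector in vectors
    ]


# Hypothetical data: each row is [height in cm, weight in kg].
raw_vectors = [
    [150.0, 50.0],
    [160.0, 60.0],
    [170.0, 70.0],
]

scaled = standardize_columns(raw_vectors)
for vector in scaled:
    print([round(v, 3) for v in vector])
```

After scaling, no single feature dominates merely because it is measured in larger units.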

Example

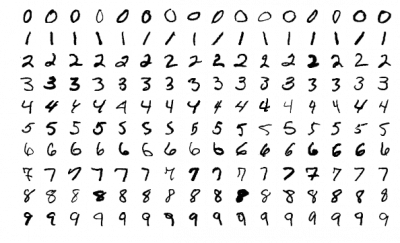

Suppose we have a dataset of images of handwritten digits (0-9) and want to use a machine learning algorithm to classify each image as its corresponding digit. To do this, we need to extract features from each image and assemble them into a feature vector that the algorithm can take as input.

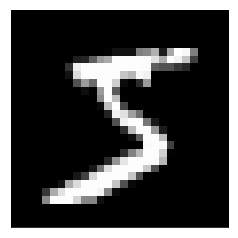

Take for instance this grayscale image of the numeral "5":

From this image we can compute the following features:

- Mean intensity of pixels

- Standard deviation of pixel intensities

- Skewness of pixel intensities

- Kurtosis of pixel intensities

Our feature vector for this image would then be:

[Mean intensity, Standard deviation, Skewness, Kurtosis] = [123, 10, 0.5, 2.0]

This feature vector, consisting of four features, represents the image of the digit "5". A machine learning algorithm can take such vectors as input and be trained to classify images based on these features.
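The four statistics above could be computed along these lines. This is a sketch under stated assumptions: the image is a small made-up grid of intensities (so it will not reproduce the illustrative values 123, 10, 0.5, 2.0), population moments are used, and kurtosis is the non-excess form. The function name `intensity_features` is hypothetical.

```python
from math import sqrt


def intensity_features(image):
    """Return [mean, standard deviation, skewness, kurtosis] of pixel intensities."""
    pixels = [p for row in image for p in row]
    n = len(pixels)
    mean = sum(pixels) / n
    # Central moments of order 2, 3 and 4 (population versions, dividing by n).
    m2 = sum((p - mean) ** 2 for p in pixels) / n
    m3 = sum((p - mean) ** 3 for p in pixels) / n
    m4 = sum((p - mean) ** 4 for p in pixels) / n
    std = sqrt(m2)
    if std == 0:
        # A uniform image has no spread; skewness and kurtosis are undefined,
        # so this sketch reports 0.0 for them.
        return [mean, 0.0, 0.0, 0.0]
    skewness = m3 / std ** 3
    kurtosis = m4 / m2 ** 2  # non-excess kurtosis; a normal distribution gives 3
    return [mean, std, skewness, kurtosis]


# Hypothetical 4x4 grayscale image with intensities in the range 0-255.
image = [
    [0, 0, 255, 0],
    [0, 255, 0, 0],
    [0, 0, 255, 0],
    [0, 255, 255, 0],
]

features = intensity_features(image)
print(features)
```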

Why are Feature Vectors Important?

Feature vectors represent complex data in a form that algorithms can readily process. Because every data point is described by the same set of numerical features, machine learning algorithms can compare and manipulate data points directly, which makes tasks such as classification, regression, and clustering possible.
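To illustrate how comparing feature vectors supports classification, here is a minimal nearest-neighbor sketch using Euclidean distance. Nearest-neighbor is just one simple technique chosen for the example; the stored vectors for the digits "1" and "8" are invented, and `nearest_label` is a hypothetical helper.

```python
from math import dist


def nearest_label(query, labeled_vectors):
    """Return the label of the stored feature vector closest to the query."""
    # dist() computes the Euclidean distance between two equal-length vectors.
    _, closest_label = min(
        labeled_vectors, key=lambda pair: dist(query, pair[0])
    )
    return closest_label


# Hypothetical training data:
# ([mean intensity, standard deviation, skewness, kurtosis], digit label)
training = [
    ([123.0, 10.0, 0.5, 2.0], "5"),
    ([90.0, 25.0, -0.2, 3.1], "1"),
    ([140.0, 12.0, 0.7, 1.8], "8"),
]

query = [120.0, 11.0, 0.4, 2.1]
print(nearest_label(query, training))  # 5
```

Because the features here are unscaled, mean intensity dominates the distance; in practice the vectors would usually be scaled first.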

Feature vectors can also be analyzed with well-established mathematical tools from linear algebra and calculus. These tools can transform and combine feature vectors to reveal patterns and relationships in the data, which can lead to new insights and more accurate machine learning models.
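As one small example of a linear-algebra operation on a feature vector, the sketch below applies a linear map (a matrix-vector product) that combines four features into two. The matrix weights are arbitrary and purely illustrative; methods such as principal component analysis would instead learn such a matrix from data.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def transform(matrix, vector):
    """Apply a linear map: each output component is the dot product of a row with the vector."""
    return [dot(row, vector) for row in matrix]


# Hypothetical 2x4 matrix mapping four features to two combined features.
projection = [
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5],
]

feature_vector = [123.0, 10.0, 0.5, 2.0]
print(transform(projection, feature_vector))  # [66.5, 1.25]
```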

Explain Like I'm 5 (ELI5)

Imagine you have a collection of pictures featuring different fruits like apples, bananas and oranges. In order to teach a computer how to recognize these items, you would need to provide it with some information about each fruit - such as its color, size and shape.

A feature vector is like a list of all the details you want the computer to know about each fruit. For instance, an apple's feature vector might look something like this:

[Redness, Roundness, Size, Sweetness]

Each item in the list is a number describing one trait, and together these numbers help the computer identify the apple. The feature vectors for bananas or oranges would look different, since those fruits have different traits.

Given many feature vectors for different fruits, the computer can learn to tell the fruits apart based on their distinctive sets of traits.